One of the best-selling video cards, and one surrounded by controversy, the GTX 970 is still without doubt one of the best you can get right now, especially in this custom variant from Gigabyte, which, in plain terms, kicks a$$!

First, about the company:

Gigabyte Technology Co., Ltd. is an international manufacturer and distributor of computer hardware products and was established in 1986 by Pei-Cheng Yeh.

Gigabyte is the largest motherboard vendor, with shipments of 4.8 million motherboards in Q1 2015, while ASUS shipped around 4.5 million motherboards in the same quarter. Gigabyte was ranked 17th in “Taiwan’s 2010 Top 20 Global Brands” by the Taiwan External Trade Development Council.

Gigabyte designs and manufactures motherboards for both AMD and Intel platforms, and also produces notebooks and graphics cards with AMD and Nvidia GPUs, including the GeForce GTX 980 and the Radeon R9 290X.

Gigabyte's other products have included desktop computers, tablet computers, ultrabooks, mobile phones, personal digital assistants, server motherboards, server racks, networking equipment, optical drives, computer monitors, mice, keyboards, cooling components, power supplies, and computer cases.

Price when reviewed: £292.23 (via Amazon.co.uk)

At the time of this review, you also get The Witcher 3 for free with any GTX 9xx card bought from approved vendors, while the offer lasts.

Gigabyte NVIDIA GTX 970 G1 Gaming Edition Gaming Graphics Card (4GB, PCI Express, 256 Bit)

Presentation

Let’s talk about the Maxwell architecture behind the GTX 9xx family, which uses its second and, to date, latest iteration.

It introduced several new technologies:

Dynamic Super Resolution

It renders the game at a higher resolution and then scales it down to the lower resolution of your display, which brings more quality to that lower resolution.

In general, DSR works like downsampling, but with a simple on/off control for the user. Textures are now sampled at a higher resolution, which equates to more pixels being sampled.
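As a rough illustration of the downsampling step, the sketch below averages each factor×factor block of a high-resolution frame into one output pixel. It is a minimal sketch assuming a grayscale image stored as nested lists and a plain box filter; NVIDIA describes DSR as using a Gaussian filter for this step, so the filter here is a simplification, and the function name `downsample` is hypothetical.

```python
def downsample(image, factor):
    """Box-filter downsample: average each factor x factor block into one pixel.

    `image` is a list of rows of grayscale values whose width and height are
    both multiples of `factor` (factor=2 corresponds to a 4x DSR setting,
    e.g. rendering 3840x2160 for a 1920x1080 display).
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be multiples of factor")
    out = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            block = [image[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A 4x4 "high-res" render containing a hard diagonal edge (0 = dark, 1 = bright)
high_res = [
    [1, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
]
print(downsample(high_res, 2))  # [[0.75, 1.0], [0.0, 0.75]]
```

The pixels along the edge come out as intermediate shades (0.75) instead of a hard 0/1 step, which is where the smoother image comes from.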

Nvidia VXGI (Real-Time Voxel Global Illumination)

VXGI is the next step in lighting for games: in simple terms, a new real-time global illumination engine technology. Global illumination is often used in the film industry to produce CG scenes. VXGI uses a 3D structure of voxels to capture coverage and lighting information at every point in a scene. This data structure can then be traced during the final stage of rendering to determine precisely how light bounces around the scene. VXGI delivers global illumination with improved performance. This software algorithm will only work with Maxwell GPUs.
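To make the voxel idea more concrete, here is a heavily simplified sketch: lit surface samples are binned into a coarse voxel grid (averaging their radiance per voxel), and a ray is then marched through the grid until it hits an occupied voxel. This is an illustration under stated assumptions, not NVIDIA's implementation: real VXGI traces cones through a filtered, multi-resolution voxel structure, whereas this sketch marches a single ray through one uniform grid, and all function names are hypothetical.

```python
import math
from collections import defaultdict


def voxel_key(x, y, z, voxel_size):
    """Integer grid coordinates of the voxel containing point (x, y, z)."""
    return (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))


def voxelize(samples, voxel_size):
    """Bin (x, y, z, radiance) surface samples into voxels, averaging radiance per voxel."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for x, y, z, radiance in samples:
        key = voxel_key(x, y, z, voxel_size)
        totals[key] += radiance
        counts[key] += 1
    return {key: totals[key] / counts[key] for key in totals}


def trace(grid, origin, direction, voxel_size, max_steps=64):
    """March a ray in half-voxel steps; return the radiance of the first occupied voxel, else 0.0."""
    x, y, z = origin
    dx, dy, dz = direction
    step = voxel_size / 2
    for _ in range(max_steps):
        key = voxel_key(x, y, z, voxel_size)
        if key in grid:
            return grid[key]
        x, y, z = x + dx * step, y + dy * step, z + dz * step
    return 0.0


# Two lit samples on a wall near x = 2.5; one voxel = 1 unit
grid = voxelize([(2.5, 0.5, 0.5, 1.0), (2.7, 0.2, 0.9, 3.0)], voxel_size=1.0)
print(trace(grid, (0.5, 0.5, 0.5), (1.0, 0.0, 0.0), voxel_size=1.0))  # 2.0 (hits the lit wall)
print(trace(grid, (0.5, 0.5, 0.5), (0.0, 1.0, 0.0), voxel_size=1.0))  # 0.0 (misses everything)
```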

Multi-Frame Sampled Anti-Aliasing (MFAA)

It gives the same quality as 4x MSAA with only the performance cost of 2x MSAA. It is based on a Temporal Synthesis Filter with coverage samples per frame and per pixel. However, support for Coverage-Sampling Anti-Aliasing (CSAA) was removed.
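The principle can be sketched by alternating two different 2-sample patterns on even and odd frames and blending the two results, so two consecutive frames together see four sample positions. This is a minimal sketch under stated assumptions: the sample positions are hypothetical, not NVIDIA's actual patterns, and a plain two-frame average stands in for NVIDIA's Temporal Synthesis Filter.

```python
# Hypothetical sample positions inside a unit pixel (not NVIDIA's real patterns)
PATTERN_EVEN = [(0.25, 0.25), (0.75, 0.75)]  # used on even frames
PATTERN_ODD = [(0.75, 0.25), (0.25, 0.75)]   # used on odd frames


def coverage(inside, samples):
    """Fraction of sample positions covered by a primitive; inside(x, y) -> bool."""
    return sum(1 for x, y in samples if inside(x, y)) / len(samples)


def mfaa_coverage(inside):
    """2 samples per frame over 2 frames, blended with a simple average."""
    even = coverage(inside, PATTERN_EVEN)
    odd = coverage(inside, PATTERN_ODD)
    return (even + odd) / 2


def msaa4_coverage(inside):
    """Reference: all 4 positions sampled within a single frame."""
    return coverage(inside, PATTERN_EVEN + PATTERN_ODD)


def edge(x, y):
    """A triangle edge crossing the pixel: covered where x + y < 1.2."""
    return x + y < 1.2


print(coverage(edge, PATTERN_EVEN))  # 0.5  (2 samples, one frame only)
print(mfaa_coverage(edge))           # 0.75
print(msaa4_coverage(edge))          # 0.75
```

For a static pixel the blended two-frame result matches 4-sample coverage while each frame only pays for two samples; handling motion between frames, which this sketch ignores, is the hard part in practice.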

HDMI 2.0 support

HDMI 2.0 is the successor to the HDMI 1.4a/b standard that pretty much all of us use today. The primary reason to bring HDMI 2.0 support to Maxwell is that 4K Ultra HD televisions require more bandwidth to operate at their full potential: 4K Ultra HD has four times the resolution of 1080p, so it needs more throughput to handle that extra data.
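A back-of-the-envelope calculation shows why. The sketch below computes the raw rate of the visible pixel data alone, assuming 24 bits per pixel (8-bit RGB) and ignoring blanking intervals and audio, and compares it with the usable data rate of each HDMI version after TMDS 8b/10b encoding (10.2 Gbit/s maximum link rate for HDMI 1.4, 18 Gbit/s for HDMI 2.0). The function names are illustrative only.

```python
def pixel_data_rate_gbps(width, height, fps, bits_per_pixel=24):
    """Raw bit rate of the visible pixels only (no blanking, no audio), in Gbit/s."""
    return width * height * fps * bits_per_pixel / 1e9


def usable_rate_gbps(tmds_total_gbps):
    """TMDS uses 8b/10b encoding, so only 80% of the link rate carries data."""
    return tmds_total_gbps * 8 / 10


rate_1080p = pixel_data_rate_gbps(1920, 1080, 60)  # ~2.99 Gbit/s
rate_4k = pixel_data_rate_gbps(3840, 2160, 60)     # ~11.94 Gbit/s
hdmi_14 = usable_rate_gbps(10.2)                   # ~8.16 Gbit/s
hdmi_20 = usable_rate_gbps(18.0)                   # 14.4 Gbit/s

print(f"4K60 carries {rate_4k / rate_1080p:.0f}x the pixel data of 1080p60")
print(f"4K60 fits HDMI 1.4: {rate_4k <= hdmi_14}")  # False
print(f"4K60 fits HDMI 2.0: {rate_4k <= hdmi_20}")  # True
```

Blanking intervals add further overhead on a real link, so this underestimates the requirement; even so, 4K at 60 Hz already exceeds what HDMI 1.4 can carry.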

DirectX 12

The DX12 release of DirectX will introduce a number of new graphics rendering features. Microsoft has disclosed some of them at GDC and during NVIDIA’s editors’ conference. Conservative Raster is one such DX graphics feature. Another is Raster Ordered Views (ROVs), which give developers control over the ordering of pixel shader operations. GM2xx supports both Conservative Raster and ROVs. The new graphics features included in DX12 will be accessible from either DX11 or DX12, so developers will be free to use them with either API.
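Conservative rasterization is easiest to understand by contrast with the standard rule. Standard rasterization marks a pixel as covered only if the triangle contains the pixel's center; conservative rasterization marks it whenever the triangle touches any part of the pixel. The sketch below is a simplified software model, assuming a counter-clockwise triangle and unit-sized pixels; the per-edge corner test plus bounding-box check is one common way to approximate conservative coverage (it can still over-report pixels near sharp vertices), not a description of NVIDIA's hardware. Function names are hypothetical.

```python
def edge_fn(a, b, p):
    """> 0 when point p lies to the left of edge a->b (inside, for a CCW triangle)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def edges(tri):
    a, b, c = tri
    return ((a, b), (b, c), (c, a))


def covers_center(tri, px, py):
    """Standard rule: the pixel's center must be inside all three edges."""
    center = (px + 0.5, py + 0.5)
    return all(edge_fn(a, b, center) >= 0 for a, b in edges(tri))


def covers_conservative(tri, px, py):
    """Conservative rule: reject only if the whole pixel lies outside some edge."""
    corners = [(px, py), (px + 1, py), (px, py + 1), (px + 1, py + 1)]
    for a, b in edges(tri):
        if max(edge_fn(a, b, q) for q in corners) < 0:
            return False
    # also require the pixel to overlap the triangle's bounding box
    xs = [v[0] for v in tri]
    ys = [v[1] for v in tri]
    return px < max(xs) and px + 1 > min(xs) and py < max(ys) and py + 1 > min(ys)


# A thin sliver triangle that never reaches a pixel center in row 0
sliver = ((0.0, 0.0), (4.0, 0.0), (4.0, 0.2))
print(covers_center(sliver, 1, 0))        # False: the standard rule misses it
print(covers_conservative(sliver, 1, 0))  # True: the sliver touches this pixel
print(covers_conservative(sliver, 1, 1))  # False: the pixel above is untouched
```

Guaranteed coverage of thin triangles like this matters for techniques such as voxelization, where a missed sliver would leave a hole in the data.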

Others include:

VR Direct

Multi-Projection Acceleration

Third Generation Delta Color Compression

Multi-Pixel Programmable Sampling

Second generation Maxwell also changed the ROP to memory controller ratio from 8:1 to 16:1.

However, some of the ROPs in the GTX 970 are generally idle because there are not enough enabled SMMs to give them work, which reduces the card's maximum fill rate.
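A quick calculation illustrates the point. It assumes, as has been commonly reported for Maxwell but is not stated in this review, that each SMM can hand at most 4 pixels per clock to the ROPs. The figures used are the GTX 970's 13 SMMs and 56 ROPs and this card's 1329 MHz Gaming-mode boost clock; the function name is hypothetical.

```python
def pixel_fill_rate_gpix(smm_count, rop_count, clock_mhz, pixels_per_smm=4):
    """Peak pixel fill rate in Gpixel/s, capped by whichever stage is narrower.

    ASSUMPTION: each SMM can output at most `pixels_per_smm` pixels per clock,
    and each ROP can write one pixel per clock.
    """
    pixels_per_clock = min(smm_count * pixels_per_smm, rop_count)
    return pixels_per_clock * clock_mhz / 1000


rop_limit = 56 * 1329 / 1000                   # what 56 ROPs alone could write
smm_limit = pixel_fill_rate_gpix(13, 56, 1329)  # 13 SMMs x 4 = only 52 pixels/clock
print(f"ROP limit: {rop_limit:.1f} Gpix/s, SMM-limited: {smm_limit:.1f} Gpix/s")
# ROP limit: 74.4 Gpix/s, SMM-limited: 69.1 Gpix/s
```

Under this assumption, 4 of the 56 ROPs never receive work, because the 13 SMMs can only feed 52 pixels per clock.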

Second-generation Maxwell also has up to 4 SMM units per GPC, compared to 5 SMM units per GPC on first-generation Maxwell (GM107).

GM204 supports CUDA Compute Capability 5.2 compared to 5.0 on GM107/GM108 GPUs, 3.5 on GK110/GK208 GPUs and 3.0 on GK10x GPUs.

Second-generation Maxwell has an upgraded NVENC that supports HEVC encoding and adds H.264 encoding at 1440p/60 fps and 4K/60 fps, whereas NVENC on first-generation Maxwell (GM10x) GPUs only supported H.264 encoding at 1080p/60 fps.
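In practice, tools such as FFmpeg expose these hardware encoders directly. The sketch below only builds an FFmpeg command line that selects NVENC through FFmpeg's `h264_nvenc` or `hevc_nvenc` encoders; it assumes an FFmpeg build with NVENC support plus a GPU and driver that support the chosen codec. The file names and the helper name `nvenc_command` are hypothetical.

```python
import shlex


def nvenc_command(src, dst, codec="hevc"):
    """Build (but do not run) an FFmpeg command that encodes video with NVENC.

    codec="hevc" needs an NVENC with HEVC support (e.g. second-generation
    Maxwell); codec="h264" also works on first-generation Maxwell NVENC.
    """
    encoders = {"h264": "h264_nvenc", "hevc": "hevc_nvenc"}
    if codec not in encoders:
        raise ValueError(f"unsupported codec: {codec}")
    return ["ffmpeg", "-i", src, "-c:v", encoders[codec], "-c:a", "copy", dst]


cmd = nvenc_command("gameplay_4k60.mkv", "gameplay_hevc.mkv")
print(shlex.join(cmd))
# ffmpeg -i gameplay_4k60.mkv -c:v hevc_nvenc -c:a copy gameplay_hevc.mkv
```

To actually run the encode, pass the list to `subprocess.run(cmd, check=True)`; building it as a list avoids shell-quoting problems with file names.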

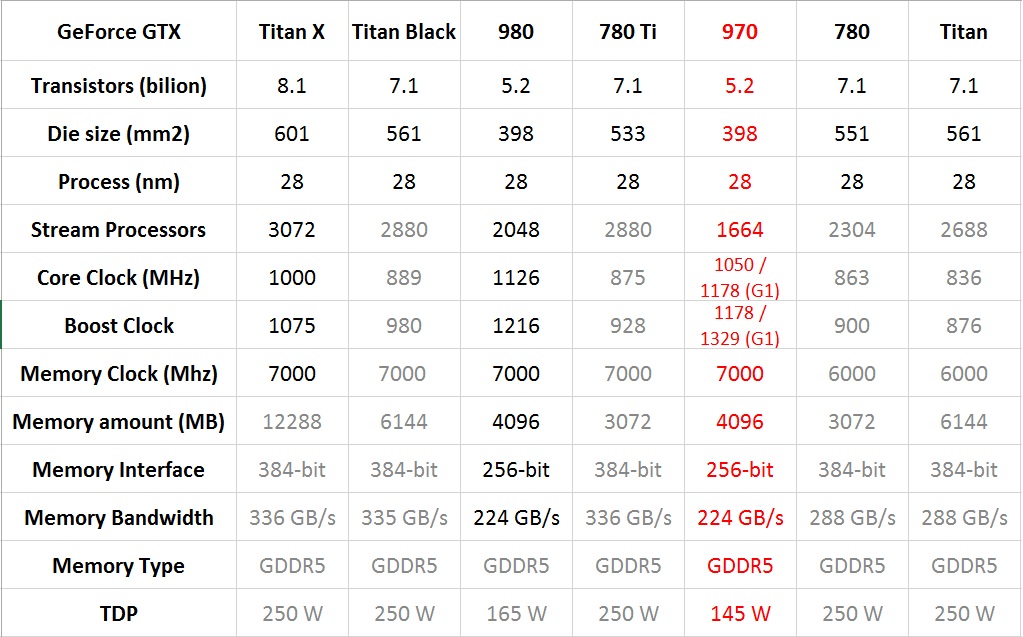

Moving on to the GTX 970.

The GTX 970 uses the GM204 GPU, which has 5.2 billion transistors on a 398 mm² die.

It has 1664 CUDA cores (shader/stream processors), arranged as 13 clusters (SMMs) of 128 CUDA cores each, plus 13 geometry units, 104 texture units, 56 ROP units and a 256-bit GDDR5 memory interface.

As for clock speeds, the card offers two modes:

OC Mode

GPU Boost Clock : 1354 MHz

GPU Base Clock : 1203 MHz

Gaming Mode

GPU Boost Clock : 1329 MHz

GPU Base Clock : 1178 MHz
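These clocks can be turned into theoretical peak throughput with the usual rule of thumb: each CUDA core can execute one fused multiply-add (two floating-point operations) per clock, and each texture unit can filter one texel per clock. The sketch below applies that rule to the GTX 970's 1664 CUDA cores and 104 texture units; these are theoretical peaks, not measured performance, and real boost clocks vary with load and temperature.

```python
CUDA_CORES = 1664
TEXTURE_UNITS = 104

CLOCKS_MHZ = {
    "OC mode boost": 1354,
    "OC mode base": 1203,
    "Gaming mode boost": 1329,
    "Gaming mode base": 1178,
}


def peak_fp32_gflops(cores, clock_mhz):
    """One FMA (2 FLOPs) per core per clock."""
    return cores * 2 * clock_mhz / 1000


def peak_texel_rate_gtexels(tmus, clock_mhz):
    """One texel per texture unit per clock."""
    return tmus * clock_mhz / 1000


for mode, mhz in CLOCKS_MHZ.items():
    print(f"{mode:18} {peak_fp32_gflops(CUDA_CORES, mhz):7.1f} GFLOPS  "
          f"{peak_texel_rate_gtexels(TEXTURE_UNITS, mhz):6.1f} GTexel/s")
```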

For a better view, here it is compared with others.

Before we go further, let’s explore the controversy surrounding the GTX 970.

The explanation below comes via AnandTech, which covers the issue in depth:

http://www.anandtech.com/show/8935/geforce-gtx-970-correcting-the-specs-exploring-memory-allocation

In brief, various GTX 970 owners had observed that the GTX 970 was prone to topping out its reported VRAM allocation at 3.5GB rather than 4GB, and that meanwhile the GTX 980 was reaching 4GB allocated in similar circumstances. All of this was at odds with what we thought we knew about the cards and the underlying GM204 GPU, as the two cards were believed to have identical memory subsystems.

In their statement, NVIDIA confirmed that this unusual behavior existed, and that it was due to the GTX 970 possessing “fewer crossbar resources to the memory system” than the GTX 980. Furthermore, due to this, the VRAM on the GTX 970 was segmented in a way it’s not on the GTX 980, with 3.5GB of the 4GB serving as one high priority segment, and the final 512MB serving as a low priority segment. This leads to the reported performance degradation when an application needs to access more than 3.5GB. The rest of NVIDIA’s statement then focused on performance, and why they believed this design would not have a significant performance impact versus a GTX 980-style non-segmented memory structure.

When the GTX 980 and GTX 970 were released, NVIDIA provided the original specifications for the two cards. The launch GTX 900 GPUs would be a standard full/die-harvested card pair, with the GTX 980 using a fully enabled GM204 GPU, while the GTX 970 would be using a die-harvested GPU where one or more SMMs had failed. As a result of this the big differences between the GTX 980 and GTX 970 would be a minor clockspeed difference, the disabling of 3 (of 16) SMMs, and a resulting reduction in power consumption. Most important for the conversation at hand, we were told that both possessed identical memory subsystems: 4GB of 7GHz GDDR5 on a 256-bit bus, split amongst 4 ROP/memory controller partitions. All 4 partitions would be fully active on the GTX 970, with 2MB of L2 cache and 64 ROPs available.

This, as it turns out, was incorrect.

As part of our discussion with NVIDIA, they laid out the fact that the original published specifications for the GTX 970 were wrong, and as a result the “unusual” behavior that users had been seeing from the GTX 970 was in fact expected behavior for a card configured as the GTX 970 was. To get straight to the point then, NVIDIA’s original publication of the ROP/memory controller subsystem was wrong; GTX 970 has a 256-bit memory bus, but 1 of the 4 ROP/memory controller partitions was partially disabled, not fully enabled like we were originally told. As a result GTX 970 only has 56 of 64 ROPs and 1.75MB of 2MB of L2 cache enabled. The memory controllers themselves remain unchanged, with all four controllers active and driving 4GB of VRAM over a combined 256-bit memory bus.

This revelation significantly alters how we perceive the performance of the GTX 970 in certain situations, and is the missing piece of the puzzle in understanding the memory allocation issues that originally brought all of this to light. The ability to “partially disable” a ROP/memory controller partition is new to Maxwell, and we’ll fully explore how that works in a moment, but the important part to take away is that the ROP/MC layout on the GTX 970 is not fully enabled like the GTX 980, and as a result will not behave identically to the GTX 980. All of the behavior from the GTX 970 we’ve seen in light of this correction now makes sense, and it is immediately clear that this is not a hardware or software bug in GTX 970, but rather the planned/intentional behavior of the product.

So the memory is split into two segments of 3.5 GB and 512 MB, with memory bandwidths of 196 GB/s and 28 GB/s, respectively.
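The corrected figures can be reproduced with simple arithmetic. This sketch assumes four identical ROP/memory controller partitions (16 ROPs and 512 KB of L2 each, as implied by the 64-ROP, 2 MB totals) with half of one partition's ROP/L2 resources disabled, and 7 Gbps GDDR5 on a 256-bit bus split into eight 32-bit channels, seven of which serve the 3.5 GB segment and one the final 512 MB, as described in AnandTech's analysis linked above.

```python
PARTITIONS = 4             # ROP/memory controller partitions on GM204
ROPS_PER_PARTITION = 16    # 64 ROPs / 4
L2_KB_PER_PARTITION = 512  # 2 MB / 4
CHANNEL_BITS = 32          # the 256-bit bus is eight 32-bit channels
DATA_RATE_GBPS = 7.0       # 7 GHz effective GDDR5, per pin


def bandwidth_gb_s(bus_width_bits):
    """Peak bandwidth: bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * DATA_RATE_GBPS / 8


# GTX 970: one partition loses half of its ROP/L2 resources
rops = PARTITIONS * ROPS_PER_PARTITION - ROPS_PER_PARTITION // 2
l2_kb = PARTITIONS * L2_KB_PER_PARTITION - L2_KB_PER_PARTITION // 2

# The 3.5 GB segment sits behind 7 channels, the last 512 MB behind 1
fast_segment = bandwidth_gb_s(7 * CHANNEL_BITS)
slow_segment = bandwidth_gb_s(1 * CHANNEL_BITS)

print(rops, l2_kb / 1024)          # 56 1.75
print(fast_segment, slow_segment)  # 196.0 28.0
```

Note that 196 + 28 = 224 GB/s, the same peak figure as the GTX 980's unsegmented 256-bit bus.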

The biggest and most painful question about all of this then is how did this happen? How did we get fed incorrect specifications? NVIDIA’s explanation, in a nutshell, is that this was completely accidental and that all of this stems from assumptions made by NVIDIA’s technical marketing team.

OK, so much for the technical specs and the controversy; let’s see the card in hand.

Packaging and content

Nvidia ‘brags’ about how efficient and green Maxwell is, so we thought this would be a fitting decor to match that statement.

The box design from Gigabyte is very nice, with an all-seeing eye and minimalistic approach.

On the back, all the details and features are presented.

Inside is a black box with the name of the product.

Opening it, there lies the GTX 970 G1, protected by plenty of foam and an antistatic bag.

Underneath it are the accessories:

– 1x CD with drivers

– 1x Booklet

– 2x 4-pin Molex to 6-pin adapters

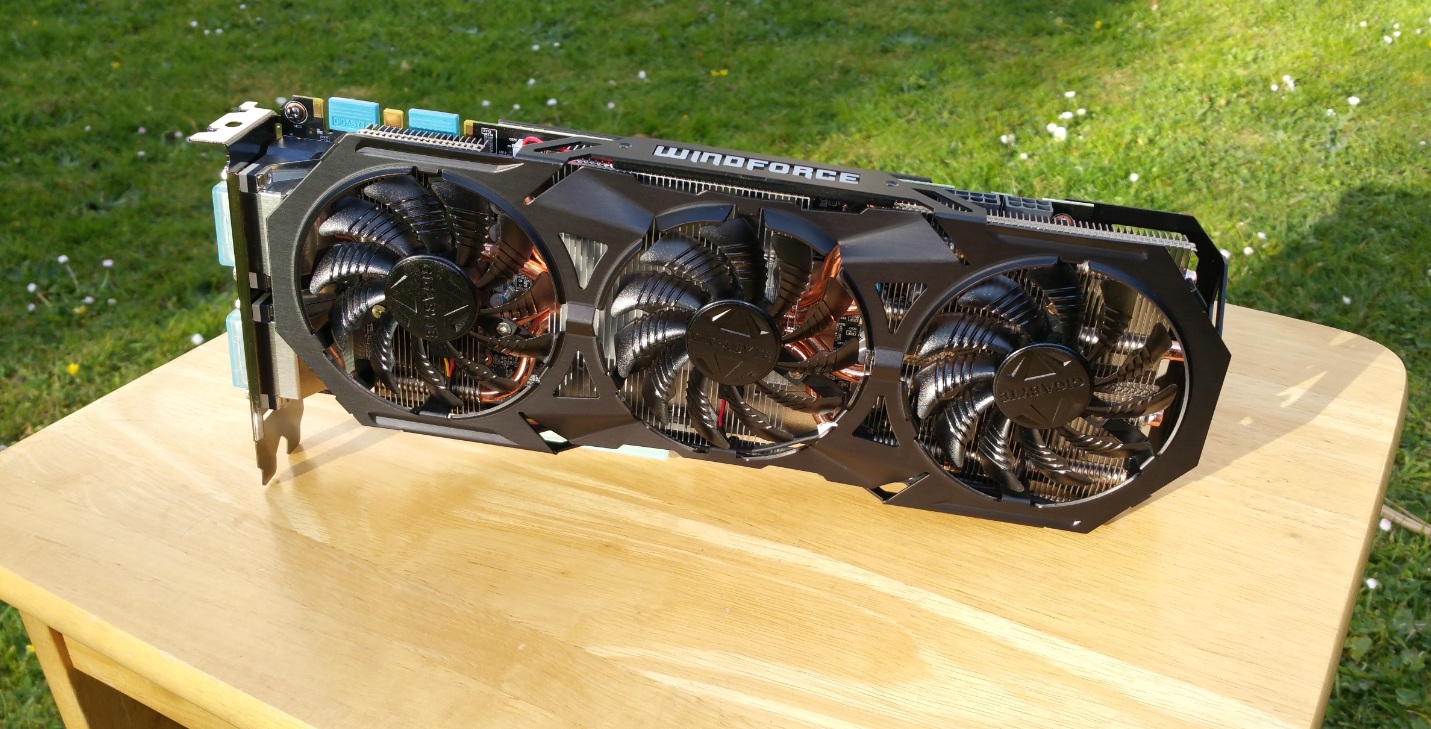

And finally, the card itself: one of the longest cards on the market at 312 mm (129 mm wide, 43 mm high)!

Gigabyte has outdone itself with this WINDFORCE triple-fan cooler, rated for 600 W!

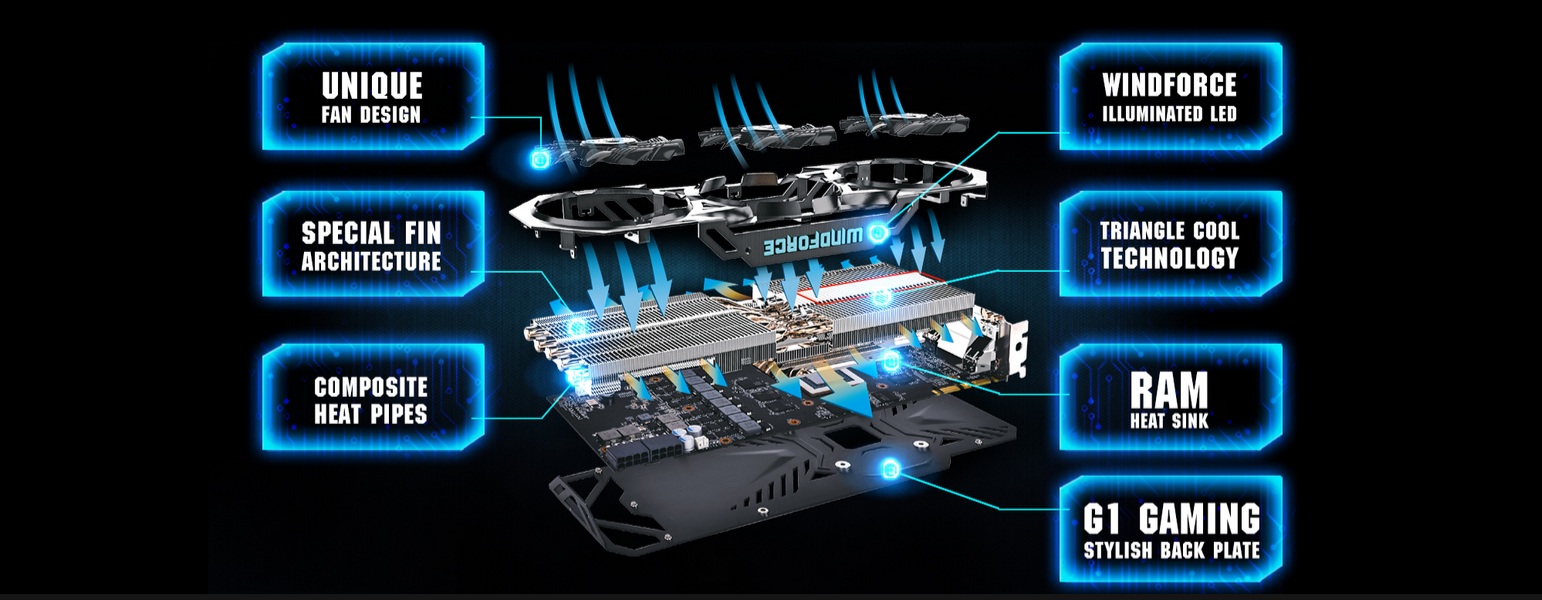

The cooling capacity of the Gigabyte GTX 970 G1 Gaming Edition is reinforced by the WINDFORCE 600W cooling system, which occupies only two slots. It features 5x 8 mm and 1x 6 mm composite heat pipes that speed up heat dissipation. A special fin architecture and a unique fan blade design use GIGABYTE’s patented Triangle Cool technology, which delivers up to 35% more cooling performance than traditional fin modules. This not only provides effective heat dissipation but also enables higher performance thanks to the lower temperatures achieved.

The backplate is amazing, with an almost perfect finish.

On the top we have the SLI connectors, the illuminated LED logo, and the power connectors: one 6-pin and one 8-pin.

At the rear we have no fewer than 6 connectors:

– 3x DisplayPort 1.2

– 1x HDMI 2.0 @ 4K/60fps

– 1x DVI-D

– 1x DVI-I

And these are the possible combinations:

So far we are very impressed with this video card!

Testing methodology

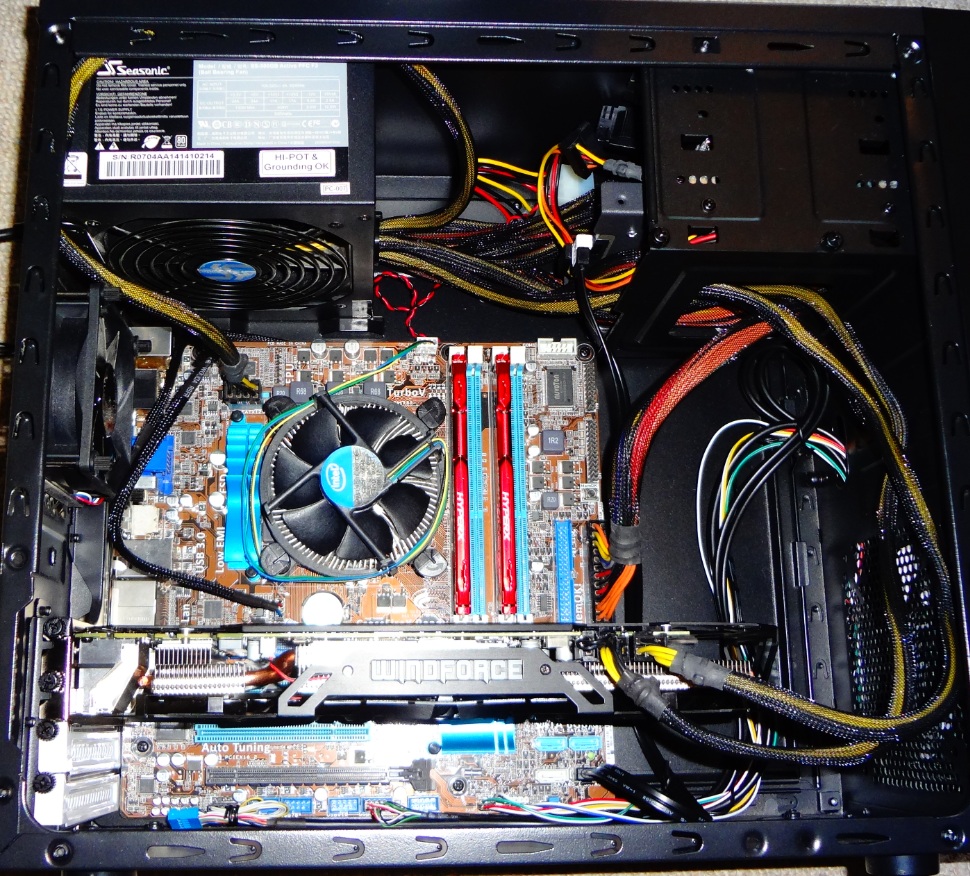

Hardware used:

Processor: Intel Core i7-2600K @ 3.4 GHz (Sandy Bridge, 32 nm), Hyper-Threading and Turbo Boost enabled, up to 3.8 GHz

CPU cooler: Stock Intel

Motherboard: Asus P8H67-M PRO

RAM: 2 x 4 GB Kingston HyperX Fury 1866 MHz

SSD : Crucial BX100 250 GB

Case: Fractal Design Core 1000

Case Fans: 1x 120 mm Noiseblocker PL-PS PWM

PSU: SeaSonic S12II 500W 34A

Display: Samsung 32″ FHD TV LED 100 Hz – UE32F5000

Sound Meter: Pyle PSPL01, positioned 1 meter from the case, with an ambient noise level of 25-30 dB(A) (we allow a ±2-3% margin of error)

Power consumption: measured at the wall with our Prodigit 2000MU power monitor

The card installed:

Ah, and let’s not forget the eye candy.

Software

Windows 7 Ultimate x64 SP1

NVIDIA GeForce 350.12 WHQL

MSI Afterburner 3.00.2384, used to record FPS, GPU load and temperatures (room temperature: 20 °C)
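For readers who want to reproduce this kind of measurement, average and minimum FPS can be derived from per-frame render times. The sketch below is a generic calculation and does not parse MSI Afterburner's own log format; the frame times are made-up sample values, not data from this review, and the function name is hypothetical.

```python
def fps_summary(frame_times_ms):
    """Return (average FPS, minimum instantaneous FPS) from frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS = frames rendered / total time, not the mean of per-frame FPS values
    average_fps = 1000 * len(frame_times_ms) / sum(frame_times_ms)
    # The slowest single frame sets the minimum instantaneous FPS
    minimum_fps = 1000 / max(frame_times_ms)
    return average_fps, minimum_fps


# Hypothetical sample: four frames at 15 ms and one 20 ms hitch
avg, low = fps_summary([15.0, 15.0, 15.0, 15.0, 20.0])
print(f"average {avg:.1f} FPS, minimum {low:.1f} FPS")  # average 62.5 FPS, minimum 50.0 FPS
```

Dividing total frames by total time weights every frame by how long it was on screen, which is why it is preferred over averaging per-frame FPS values.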

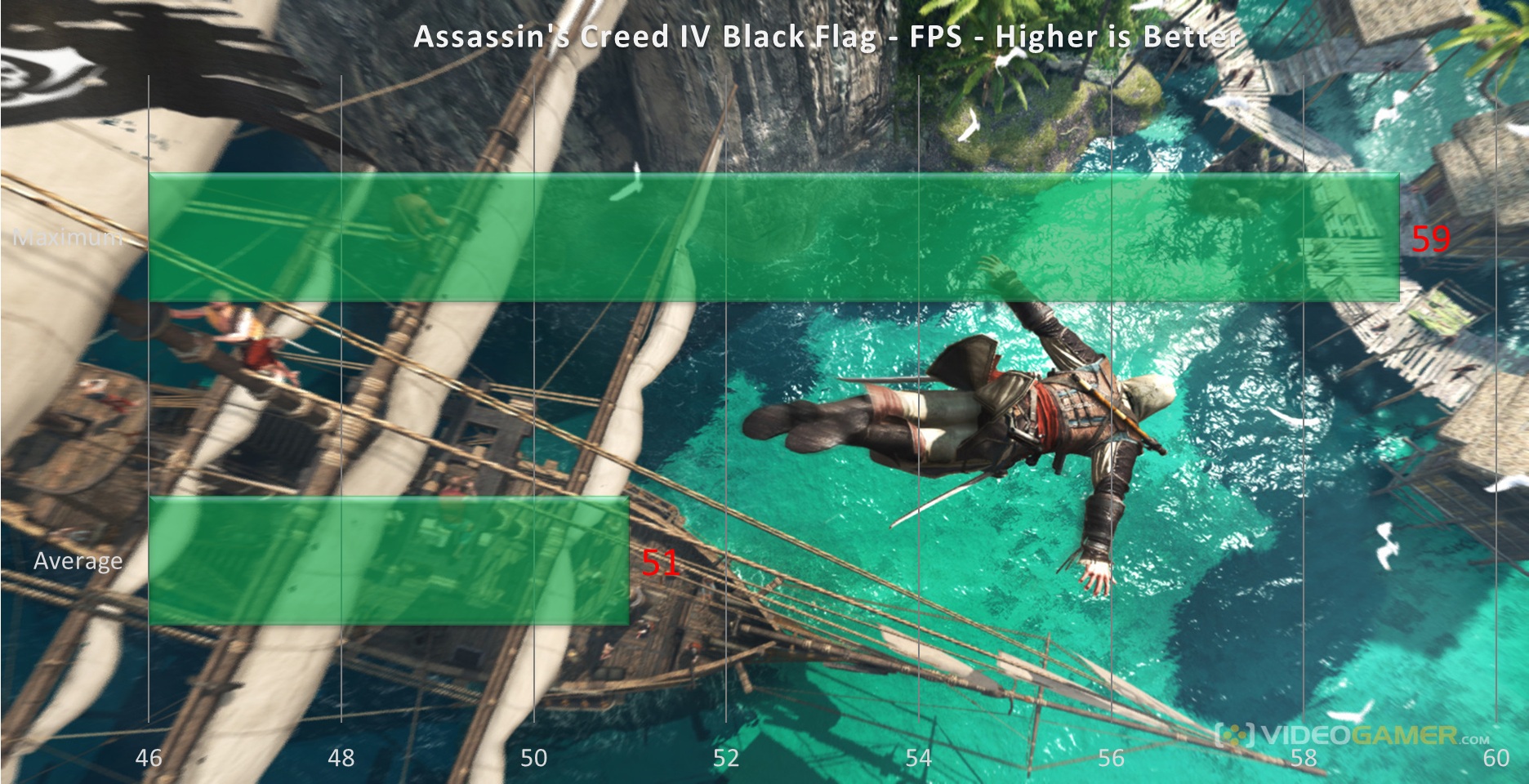

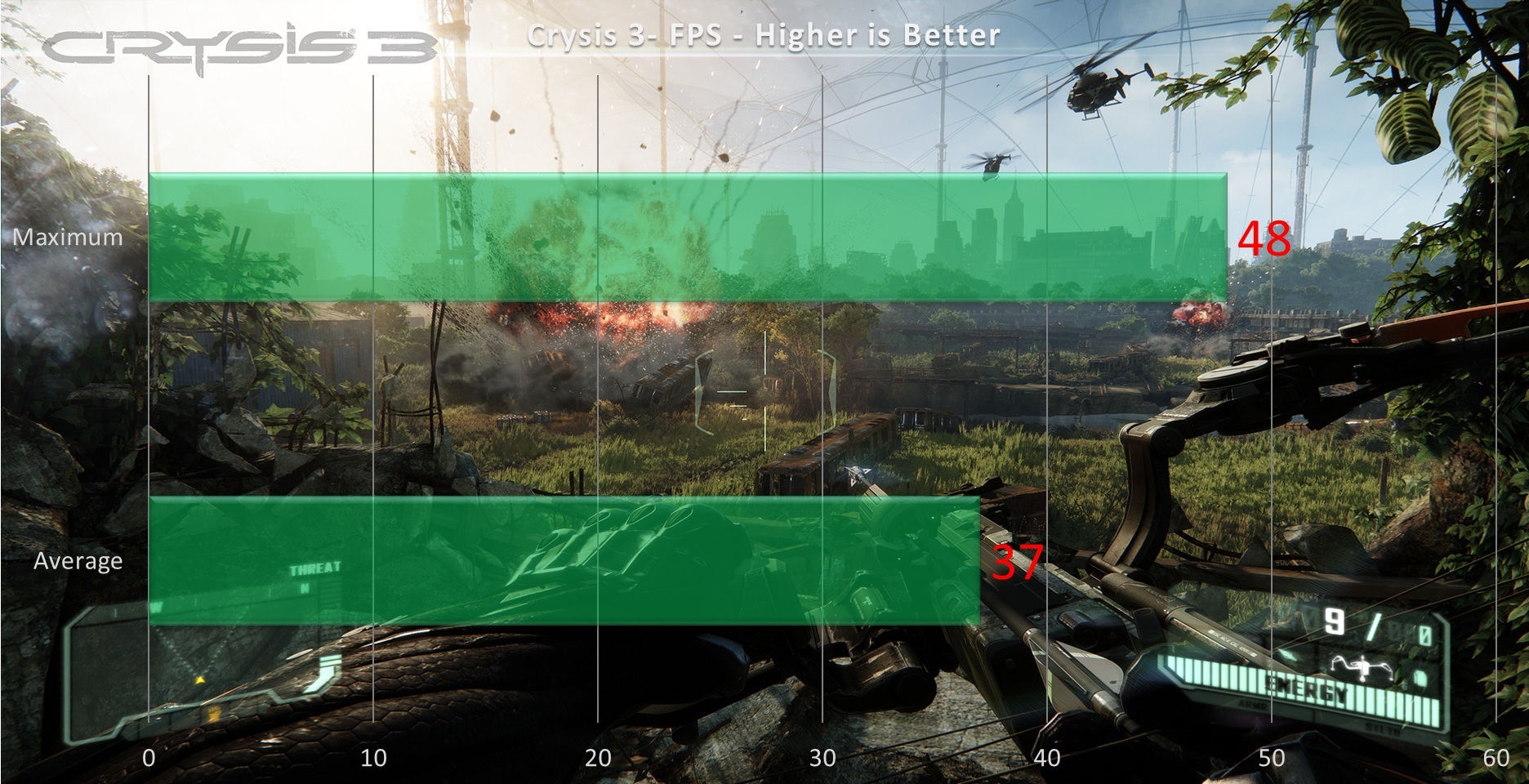

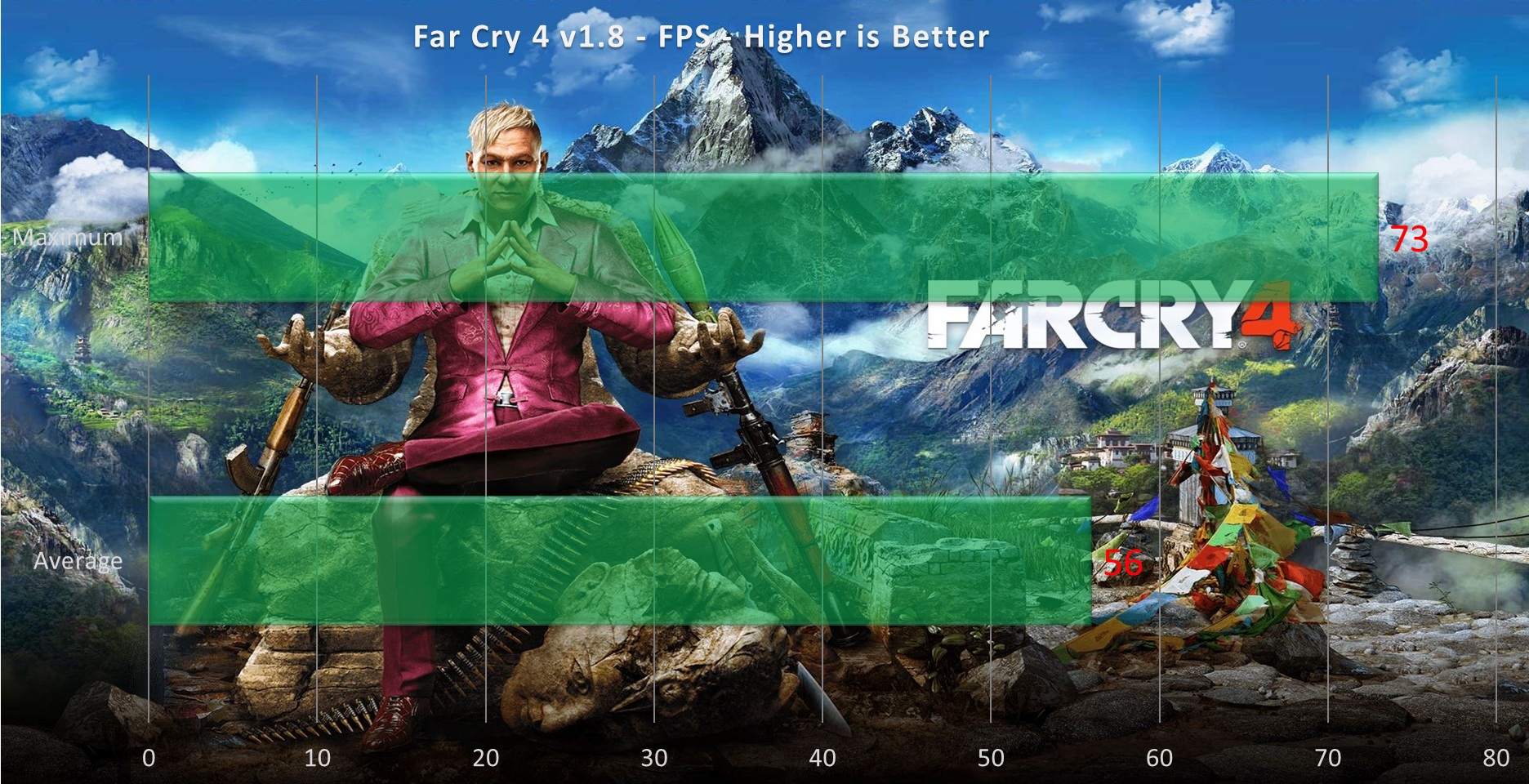

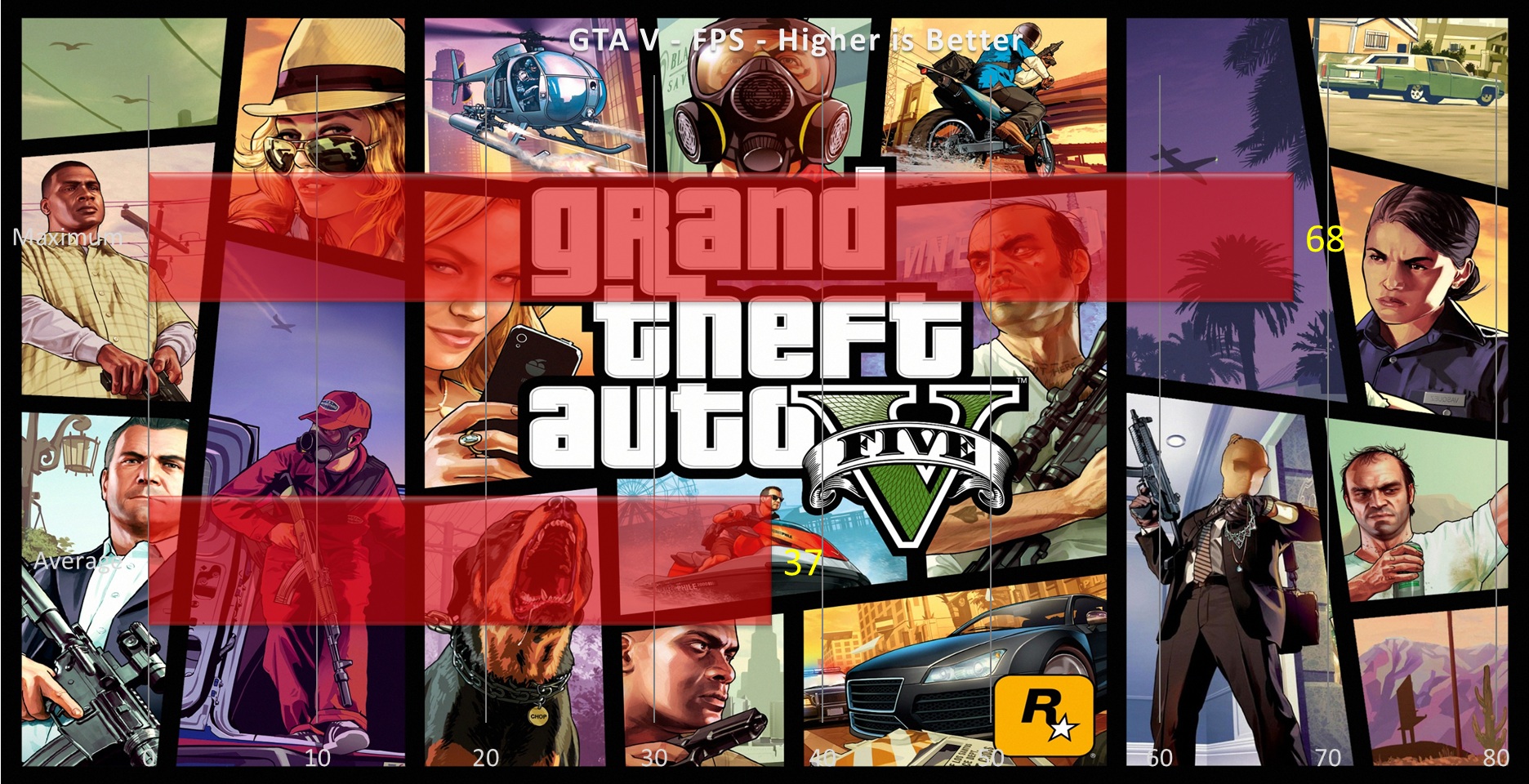

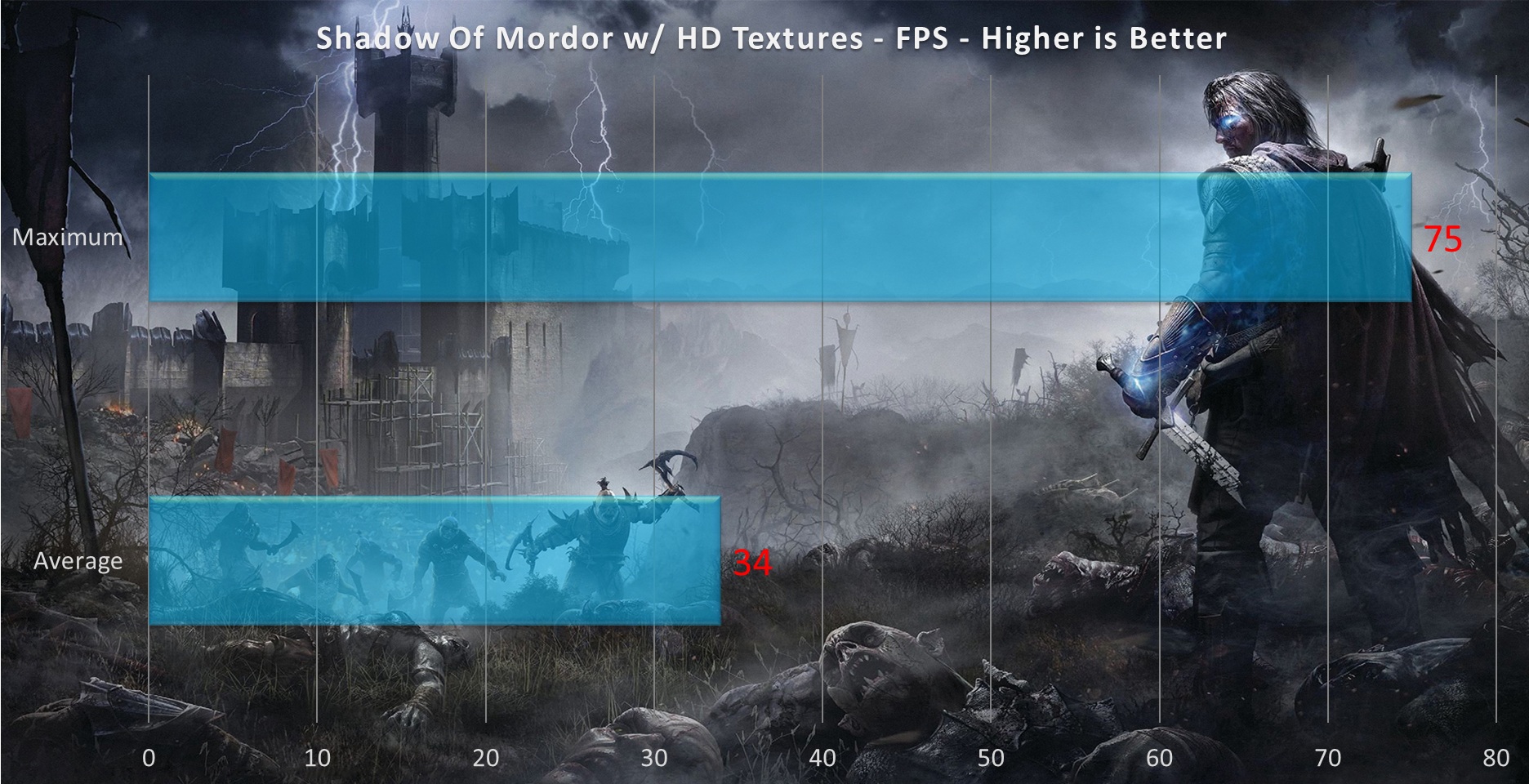

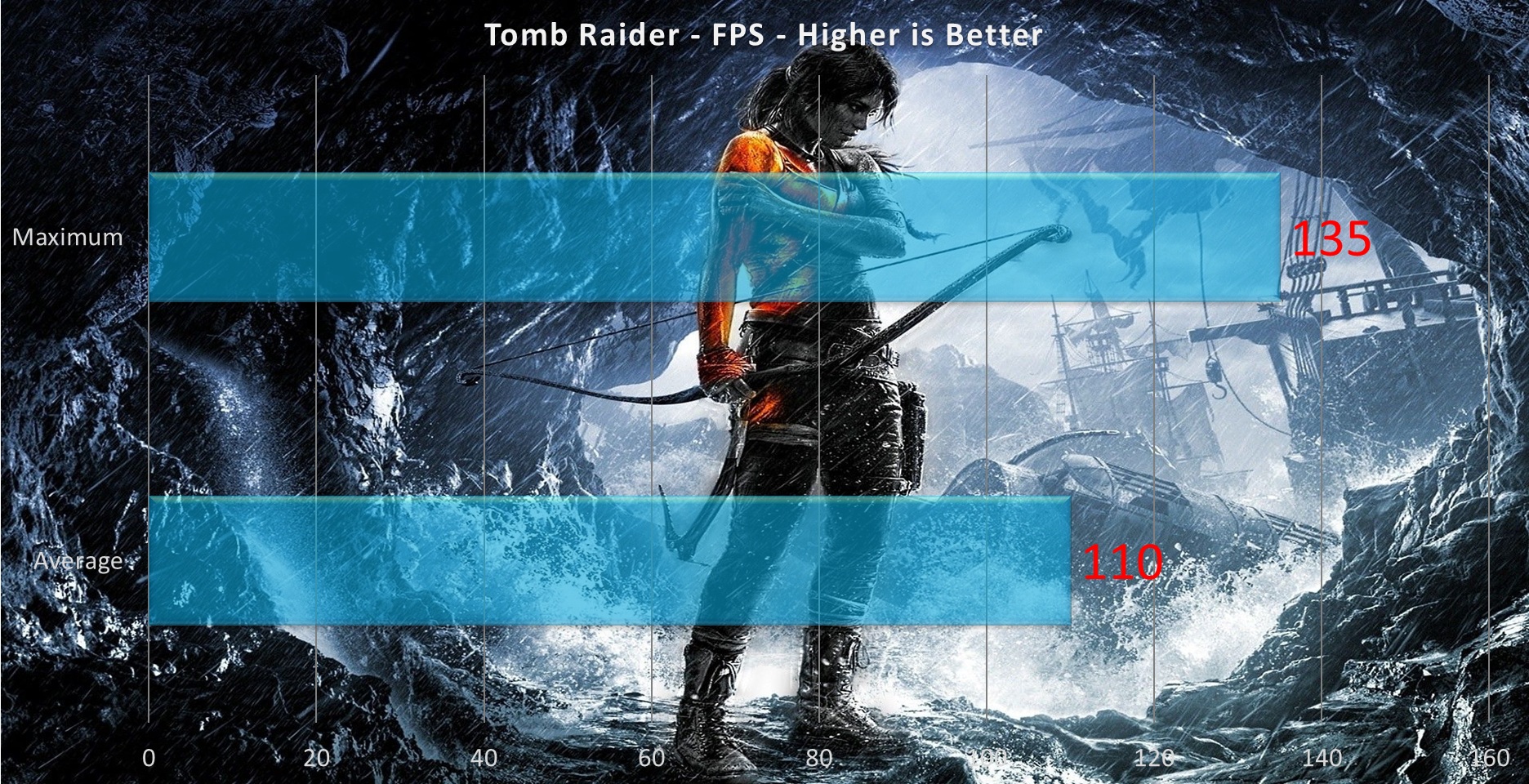

All games were run at 1920 x 1080 with maximum settings applied.

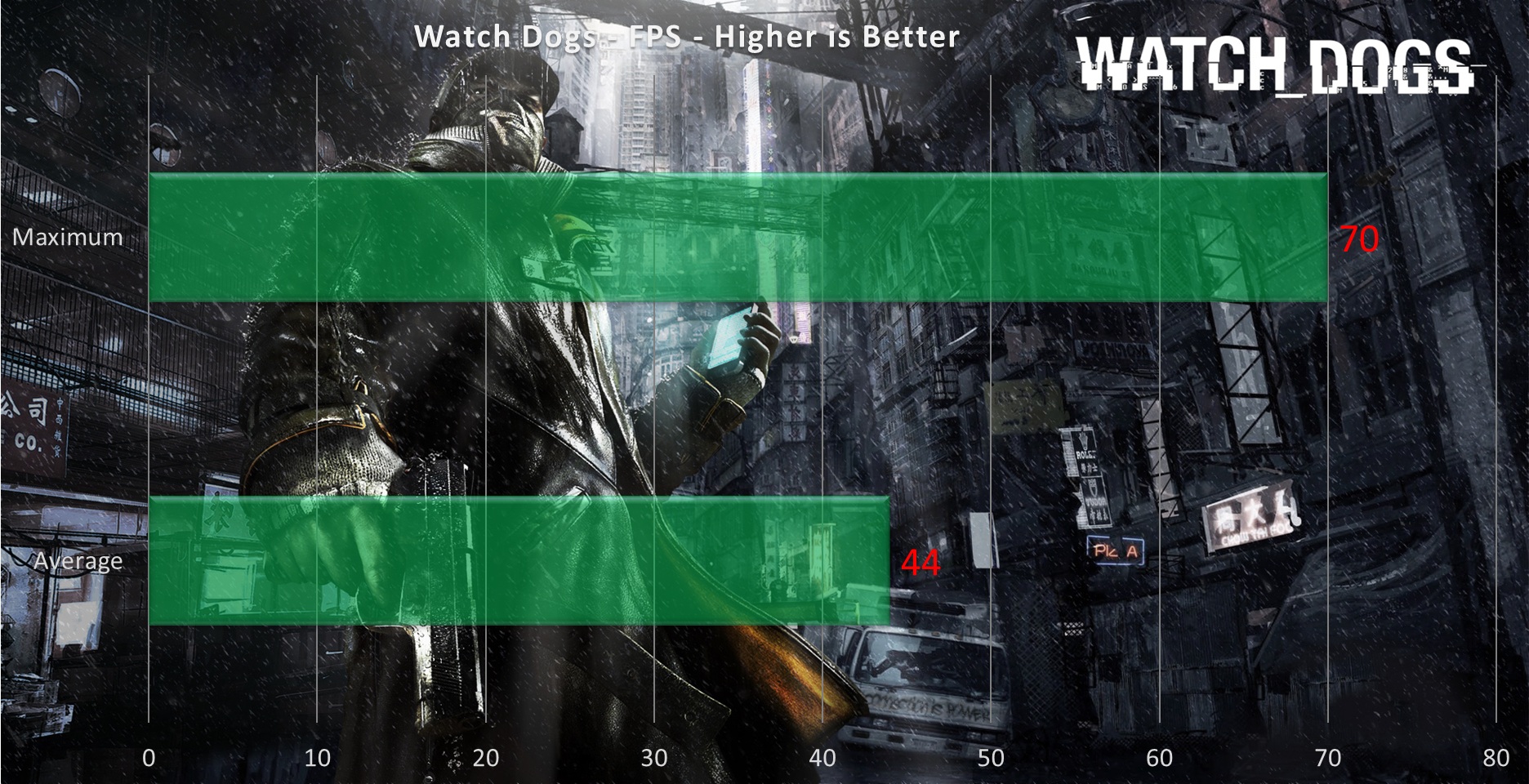

Results

The numbers speak for themselves.

Again, these are MAXIMUM settings in all the games, including the highly demanding AA and special options.

Temperatures

Desktop idle, fans on auto: 27 °C

Full gaming load (Crysis 3), fans on auto: 58 °C

Madness, I tell you!

Noise output

At 1950 rpm under full gaming load in Crysis 3 we heard nothing: the reading stayed at room ambient level, ~32 dB(A), so maximum points.

Power consumption

System at idle: 75 W

System with the GPU under full stress: 283 W

Superb numbers all around!

Conclusion

What more could anyone ask for?!

The good:

+ A lot of performance: we are talking about beating the GTX 780 and the GTX Titan.

+ The quietest card we have ever tested!

+ The lowest temperature we have ever recorded for a card under full gaming load!

+ One of the best factory overclocks, at 1329 MHz boost (1354 MHz in OC mode)

+ Excellent design

+ Maximum build quality

+ Maximum display options

+ No coil whine (at least on our sample)

+ Such overkill cooling and very good build quality should mean maximum overclocking potential.

+ Low TDP and power consumption

The bad:

– Very long! Small-case builders should be careful.

– The 3.5 GB / 0.5 GB fiasco, but that’s NVIDIA’s fault, not Gigabyte’s.

There’s no way this isn’t getting our maximum award: it is that awesome!