With the GTX 1080 Ti finally around the corner (NVIDIA just posted a countdown timer on their website, with 3 days still to go at the time of this review), let’s see what the no. 2 GPU in their hierarchy can still do.

Everybody knows who NVIDIA is:

From gaming to AI Computing

They state that the GPU has proven to be unbelievably effective at solving some of the most complex problems in computer science. It started out as an engine for simulating human imagination, conjuring up the amazing virtual worlds of video games and Hollywood films.

Today, NVIDIA’s GPU simulates human intelligence, running deep learning algorithms and acting as the brain of computers, robots, and self-driving cars that can perceive and understand the world.

NVIDIA is increasingly known as “the AI computing company.”

This is their life’s work — to amplify human imagination and intelligence.

Price when reviewed:

£ 619.99 – via Amazon.co.uk

$ 652.14 – via Amazon.com

Gigabyte GeForce GTX 1080 Founders Edition Graphic Card GV-N1080D5X-8GD-B

Watch the full video review as well, here:

Pascal, Presentation and Specs

*Courtesy of NVIDIA

Let’s see what the new Pascal architecture brings to the table:

The GeForce GTX 1000 series graphics cards are based on the latest iteration of GPU architecture called Pascal, named after the famous mathematician.

So today is all about the GP104-based video card, which uses the A1 revision of the chip and is meant to replace the GeForce GTX 980. This is the Founders Edition, the new naming scheme for the reference model.

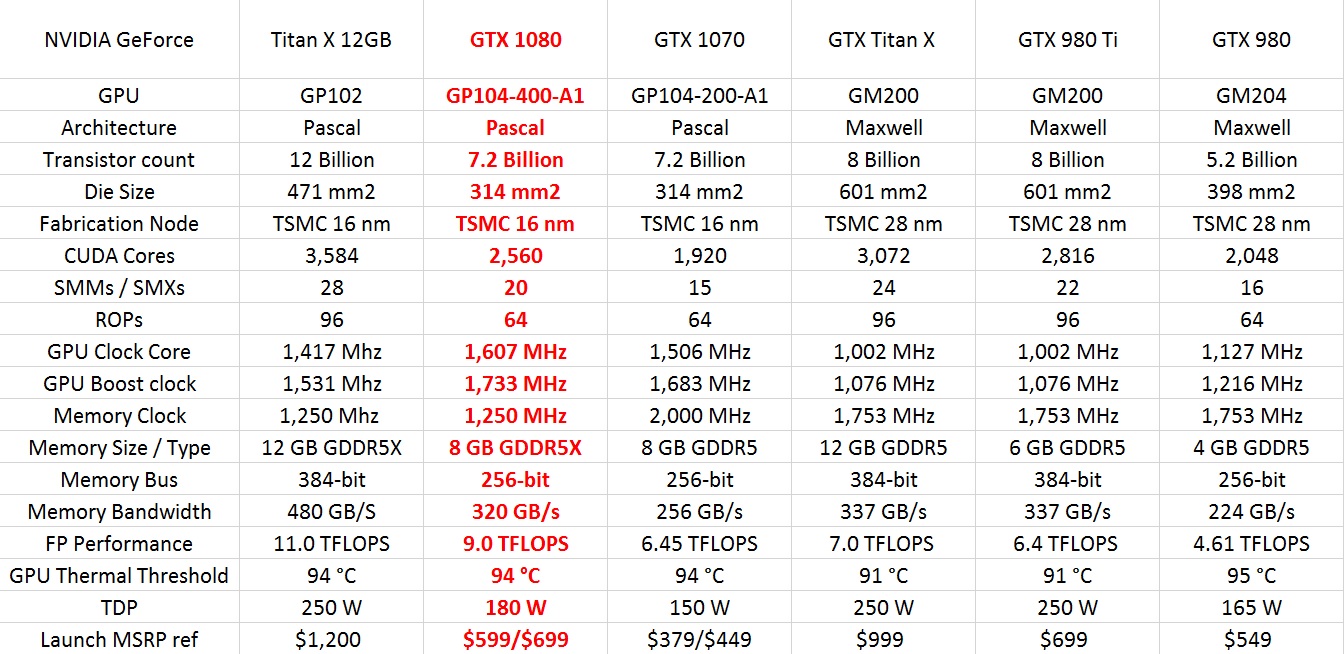

Pascal Architecture – The GeForce GTX 1080’s Pascal architecture is the most efficient GPU design ever built. It comprises 7.2 billion transistors, including 2,560 single-precision CUDA cores, with an unprecedented focus on operating frequency and energy efficiency.

16nm FinFET – The GP104 GPU is fabricated using a new 16 nm FinFET manufacturing process that allows the chip to be built with more transistors, ultimately enabling new GPU features, higher performance, and improved power efficiency.

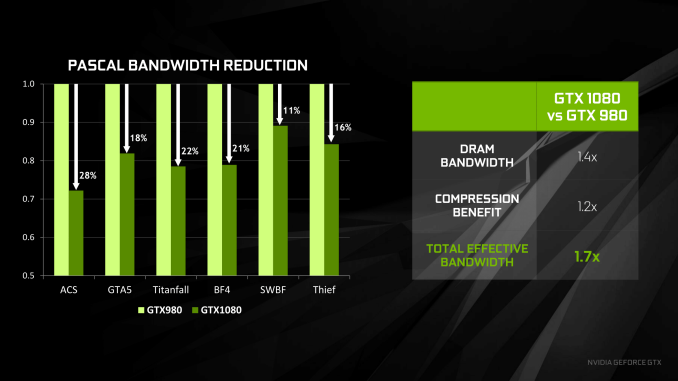

GDDR5X Memory – GDDR5X provides a significant memory bandwidth improvement over the GDDR5 memory that was used previously in NVIDIA’s flagship GeForce GTX GPUs. Running at a data rate of 10 Gbps, the GeForce GTX 1080’s 256-bit memory interface provides 43% more memory bandwidth than NVIDIA’s prior GeForce GTX 980 GPU. Combined with architectural improvements in memory compression, the total effective memory bandwidth increase compared to GTX 980 is 1.7x.
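The 43% figure can be rechecked with a quick back-of-the-envelope calculation. The sketch below assumes the usual peak-bandwidth formula (per-pin data rate times bus width, divided by 8) and a 7 Gbps data rate for the GTX 980's GDDR5, which is not stated in the text above; the function name is ours.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# GTX 1080 figures come from the text: 10 Gbps on a 256-bit interface.
gtx_1080 = memory_bandwidth_gb_s(10.0, 256)
# Assumption: the GTX 980 runs GDDR5 at 7 Gbps on a 256-bit interface.
gtx_980 = memory_bandwidth_gb_s(7.0, 256)

gain_percent = (gtx_1080 / gtx_980 - 1) * 100

print(f"GTX 1080: {gtx_1080:.0f} GB/s")   # 320 GB/s
print(f"GTX 980:  {gtx_980:.0f} GB/s")    # 224 GB/s
print(f"Raw gain: {gain_percent:.0f} %")  # 43 %
```

The 1.7x "effective" figure additionally includes the compression gains, which this raw calculation does not model.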

Color Compression

The GTX 1080 and the Titan X are the only ones that use GDDR5X memory, the really new good stuff, running at 10,010 MHz (effective). NVIDIA has a lot of tricks up its sleeve, and color compression is one of them. The GPU’s compression pipeline has a number of different algorithms that intelligently determine the most efficient way to compress the data. One of the most important algorithms is delta color compression. Pascal GPUs include a significantly enhanced delta color compression capability:

– 2:1 compression has been enhanced to be effective more often

– A new 4:1 delta color compression mode has been added to cover cases where the per pixel deltas are very small and are possible to pack into ¼ of the original storage

– A new 8:1 delta color compression mode combines 4:1 constant color compression of 2×2 pixel blocks with 2:1 compression of the deltas between those blocks
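To make the delta idea a bit more tangible, here is a toy sketch. It is not NVIDIA's hardware algorithm (which is not public in this detail): it simply keeps one anchor value per block, stores the rest as neighbour-to-neighbour differences, and checks whether the result fits into half or a quarter of the original storage. The function names, the 16-value block and the sample data are all our own assumptions.

```python
def bits_for_signed(value: int) -> int:
    """Smallest two's-complement width (in bits) that can hold `value`."""
    bits = 1
    while not -(1 << (bits - 1)) <= value < (1 << (bits - 1)):
        bits += 1
    return bits


def pick_mode(block: list[int], bits_per_value: int = 8) -> str:
    """Toy selector: one full-width anchor value plus fixed-width deltas."""
    deltas = [b - a for a, b in zip(block, block[1:])]
    delta_bits = max((bits_for_signed(d) for d in deltas), default=1)
    original = bits_per_value * len(block)
    packed = bits_per_value + delta_bits * len(deltas)
    if packed * 4 <= original:
        return "4:1"
    if packed * 2 <= original:
        return "2:1"
    return "uncompressed"


flat = [120] * 16                            # constant color
gradient = [100 + i // 2 for i in range(16)]  # smooth ramp, deltas of 0 or 1
noise = [0, 255] * 8                          # worst case, huge deltas

print(pick_mode(flat))      # 4:1
print(pick_mode(gradient))  # 2:1
print(pick_mode(noise))     # uncompressed
```

The point of the toy is only that smooth image regions produce small deltas, and small deltas need fewer bits, which is why the higher ratios apply "where the per pixel deltas are very small".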

Display Connectivity:

NVIDIA’s Pascal generation products receive a nice upgrade in terms of monitor connectivity. First off, the cards get three DisplayPort connectors, one HDMI connector and a DVI connector. The days of ultra-high-resolution displays are here, and NVIDIA is adapting to them. The HDMI connector is HDMI 2.0 revision b, which enables:

– Transmission of High Dynamic Range (HDR) video

– Bandwidth up to 18 Gbps

– 4K@50/60 (2160p), which is 4 times the pixel count of 1080p/60 video

– Up to 32 audio channels for a multi-dimensional immersive audio experience

DisplayPort-wise, compatibility has shifted upwards to DP 1.4, which provides 8.1 Gbps of bandwidth per lane and offers better color support using Display Stream Compression (DSC), a “visually lossless” form of compression that VESA says “enables up to 3:1 compression ratio.” DisplayPort 1.4 can drive 60 Hz 8K displays and 120 Hz 4K displays with HDR “deep color.” DP 1.4 also supports:

– Forward Error Correction: FEC, which overlays the DSC 1.2 transport, addresses the transport error resiliency needed for compressed video transport to external displays.

– HDR meta transport: HDR meta transport uses the “secondary data packet” transport inherent in the DisplayPort standard to provide support for the current CTA 861.3 standard, which is useful for DP to HDMI 2.0a protocol conversion, among other examples. It also offers a flexible metadata packet transport to support future dynamic HDR standards.

– Expanded audio transport: This spec extension covers capabilities such as 32 audio channels, 1536 kHz sample rate, and inclusion of all known audio formats.
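A rough way to see why these link speeds matter is to compare the uncompressed pixel data rate of a display mode with what the link can carry. The sketch below is a simplification under stated assumptions that are not in the text above: 4 lanes per DisplayPort link, 8b/10b line coding on both DisplayPort and HDMI 2.0, 24 bits per pixel, and no blanking overhead. All names are ours.

```python
def pixel_rate_gbps(width: int, height: int, refresh_hz: int,
                    bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel data rate in Gbps, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Assumptions: 4 lanes per DP link, 8b/10b coding (8 payload bits per 10 sent).
DP14_PAYLOAD_GBPS = 8.1 * 4 * 8 / 10   # about 25.92 Gbps usable
HDMI20_PAYLOAD_GBPS = 18.0 * 8 / 10    # about 14.4 Gbps usable


def fits(rate_gbps: float, payload_gbps: float, dsc_ratio: float = 1.0) -> bool:
    """True if the stream fits the link, optionally after DSC compression."""
    return rate_gbps / dsc_ratio <= payload_gbps


uhd_vs_fhd = (3840 * 2160) / (1920 * 1080)   # 4.0, the "4 times" from the HDMI list
rate_4k60 = pixel_rate_gbps(3840, 2160, 60)
rate_4k120 = pixel_rate_gbps(3840, 2160, 120)
rate_8k60 = pixel_rate_gbps(7680, 4320, 60)

print(f"4K pixels vs 1080p: {uhd_vs_fhd:.0f}x")
print(f"4K60 over HDMI 2.0b:  {fits(rate_4k60, HDMI20_PAYLOAD_GBPS)}")
print(f"4K120 over DP 1.4:    {fits(rate_4k120, DP14_PAYLOAD_GBPS)}")
print(f"8K60 over DP 1.4:     {fits(rate_8k60, DP14_PAYLOAD_GBPS)}")
print(f"8K60 with 3:1 DSC:    {fits(rate_8k60, DP14_PAYLOAD_GBPS, dsc_ratio=3.0)}")
```

Under these assumptions 8K at 60 Hz only fits a single DP 1.4 link once DSC is applied, which is the reason the compression feature exists in the first place.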

High Dynamic Range (HDR) Display Compatibility

High Dynamic Range (HDR) refers to the range of luminance in an image. Without getting too technical, it typically means a range in luminance of 5 or more orders of magnitude. While HDR rendering has been around for over a decade, displays capable of directly reproducing HDR are just now becoming commonly available. Normal displays are considered Standard Dynamic Range and handle roughly 2-3 orders of magnitude difference in luminance.
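"Orders of magnitude" here is simply the base-10 logarithm of the ratio between the brightest and darkest luminance a display can show. A minimal sketch, with purely hypothetical panel figures that we picked for illustration:

```python
import math


def dynamic_range_orders(peak_nits: float, black_nits: float) -> float:
    """Orders of magnitude between the brightest and darkest luminance."""
    return math.log10(peak_nits / black_nits)


# Hypothetical panels, not measurements of any real product.
sdr = dynamic_range_orders(peak_nits=100.0, black_nits=0.1)
hdr = dynamic_range_orders(peak_nits=1000.0, black_nits=0.005)

print(f"SDR example: {sdr:.1f} orders of magnitude")
print(f"HDR example: {hdr:.1f} orders of magnitude")
```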

So HDR is becoming something very interesting, especially for the movie aficionados. Think of better pixels, a wider color space, more contrast and more interesting content. These new displays will offer unrivaled color accuracy, saturation, brightness, and black depth – in short, they will come very close to simulating the real world.

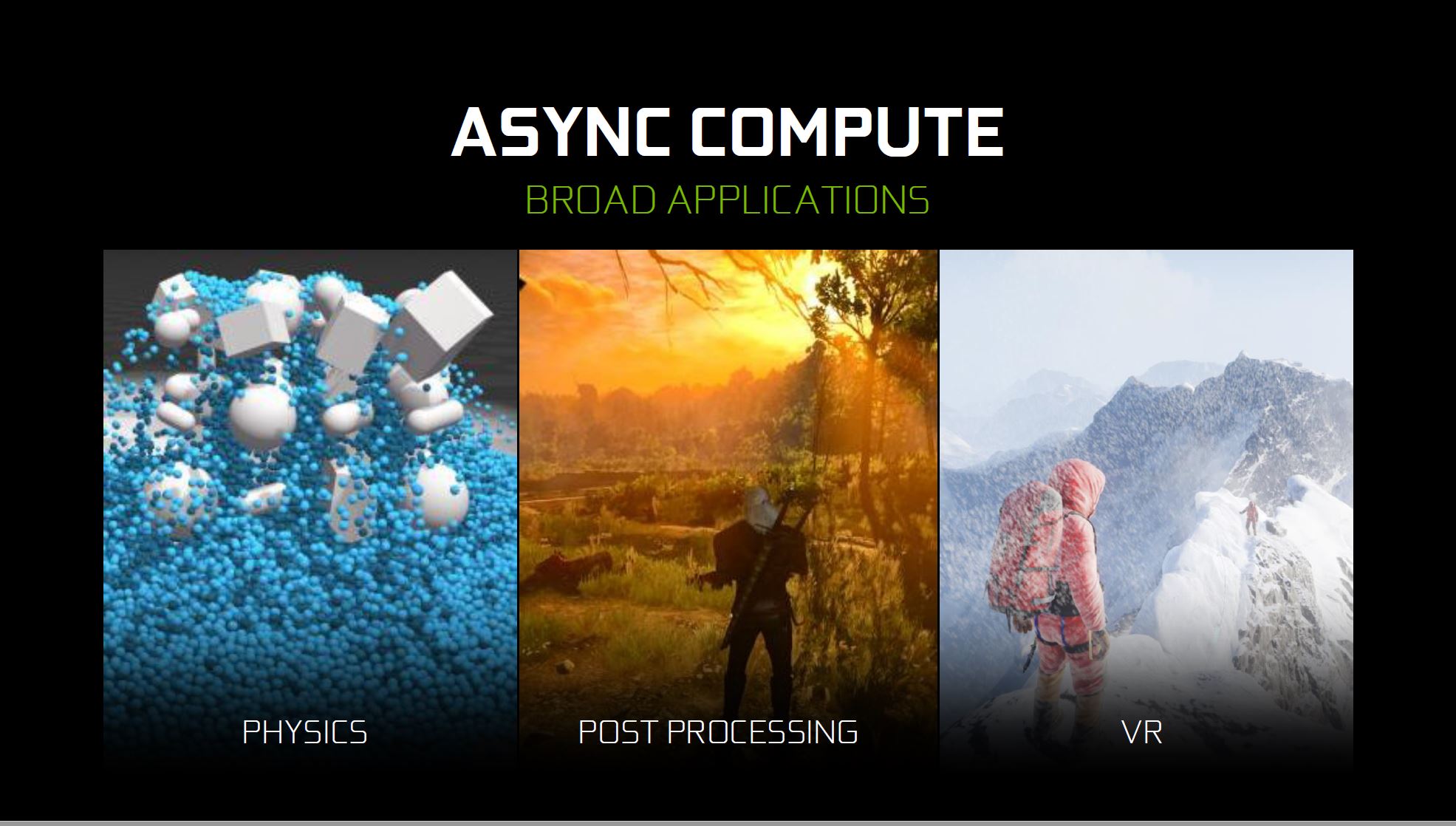

Asynchronous Compute

Modern gaming workloads are increasingly complex, with multiple independent, or “asynchronous,” workloads that ultimately work together to contribute to the final rendered image. Some examples of asynchronous compute workloads include:

– GPU-based physics and audio processing

– Postprocessing of rendered frames

– Asynchronous timewarp, a technique used in VR to regenerate a final frame based on head position just before display scanout, interrupting the rendering of the next frame to do so

These asynchronous workloads dictate a new scenario for the GPU architecture which involves overlapping workloads. Some types of workloads do not activate the GPU completely by themselves. In these cases there is a performance opportunity to run two workloads at the same time, sharing the GPU and running more efficiently.

Here, Pascal introduces support for “dynamic load balancing.” In Maxwell generation GPUs, overlapping workloads were implemented with static partitioning of the GPU into two subsets, one that runs graphics and one that runs compute. But if the compute workload takes longer than the graphics workload, and both need to complete before new work can be done, the portion of the GPU configured to run graphics will go idle. This can cause reduced performance that may exceed any performance benefit that would have been provided from running the workloads overlapped. Hardware dynamic load balancing addresses this issue by allowing either workload to fill the rest of the machine if idle resources are available.
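The difference between the two schemes can be shown with a very idealized timing model. The sketch below assumes work is perfectly divisible across execution units and that there is no switching cost, which real hardware does not guarantee; the unit counts and workload sizes are made-up numbers.

```python
def static_partition_time(graphics_work: float, compute_work: float,
                          graphics_units: int, compute_units: int) -> float:
    """Both partitions must finish; the slower one sets the frame time."""
    return max(graphics_work / graphics_units, compute_work / compute_units)


def dynamic_balance_time(graphics_work: float, compute_work: float,
                         total_units: int) -> float:
    """Idealized: idle units immediately pick up whatever work is left."""
    return (graphics_work + compute_work) / total_units


# Hypothetical frame: work is measured in "unit-milliseconds".
graphics, compute = 60.0, 140.0

static_ms = static_partition_time(graphics, compute, graphics_units=10, compute_units=10)
dynamic_ms = dynamic_balance_time(graphics, compute, total_units=20)
graphics_idle_ms = static_ms - graphics / 10   # time the graphics half sits idle

print(f"Static split:  {static_ms:.0f} ms (graphics half idle for {graphics_idle_ms:.0f} ms)")
print(f"Dynamic split: {dynamic_ms:.0f} ms")
```

With these numbers the static split takes 14 ms while the graphics half idles for 8 ms, whereas the idealized dynamic case finishes in 10 ms.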

GPU Boost 3.0

Starting with the Kepler generation, NVIDIA moved to fine-grained voltage points, which define a series of GPU voltages and their respective clock speeds. The GPU then operates at points along the resulting curve, changing clock speeds based on which voltage it’s at and what the environmental conditions are.

With Pascal, the individual voltage points are programmable, and it is now possible to adjust the clock speed of Pascal GPUs at each voltage point, a much greater level of control than before. For this to work well, though, the results are closely tied to temperature: the clocks will vary widely if the card senses inadequate conditions, and it will down-clock much more aggressively than previous generations.
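As a mental model, the voltage/frequency curve can be pictured as a small lookup table. The sketch below uses invented voltage and clock values, and it contrasts per-point offsets with a single offset applied to the whole curve, which is our assumption about how less fine-grained control would look; none of this is NVIDIA's actual interface.

```python
# Hypothetical voltage (V) -> clock (MHz) points; real curves have many more entries.
stock_curve = {0.80: 1500, 0.90: 1650, 1.00: 1750, 1.05: 1800}


def apply_global_offset(curve: dict[float, int], offset_mhz: int) -> dict[float, int]:
    """Shift every point of the curve by the same amount."""
    return {volts: mhz + offset_mhz for volts, mhz in curve.items()}


def apply_per_point_offsets(curve: dict[float, int],
                            offsets_mhz: dict[float, int]) -> dict[float, int]:
    """Shift only the listed voltage points; unlisted points stay at stock."""
    return {volts: mhz + offsets_mhz.get(volts, 0) for volts, mhz in curve.items()}


global_oc = apply_global_offset(stock_curve, 100)
tuned_oc = apply_per_point_offsets(stock_curve, {1.00: 120, 1.05: 150})

for volts in stock_curve:
    print(f"{volts:.2f} V: stock {stock_curve[volts]}, "
          f"global {global_oc[volts]}, per-point {tuned_oc[volts]} MHz")
```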

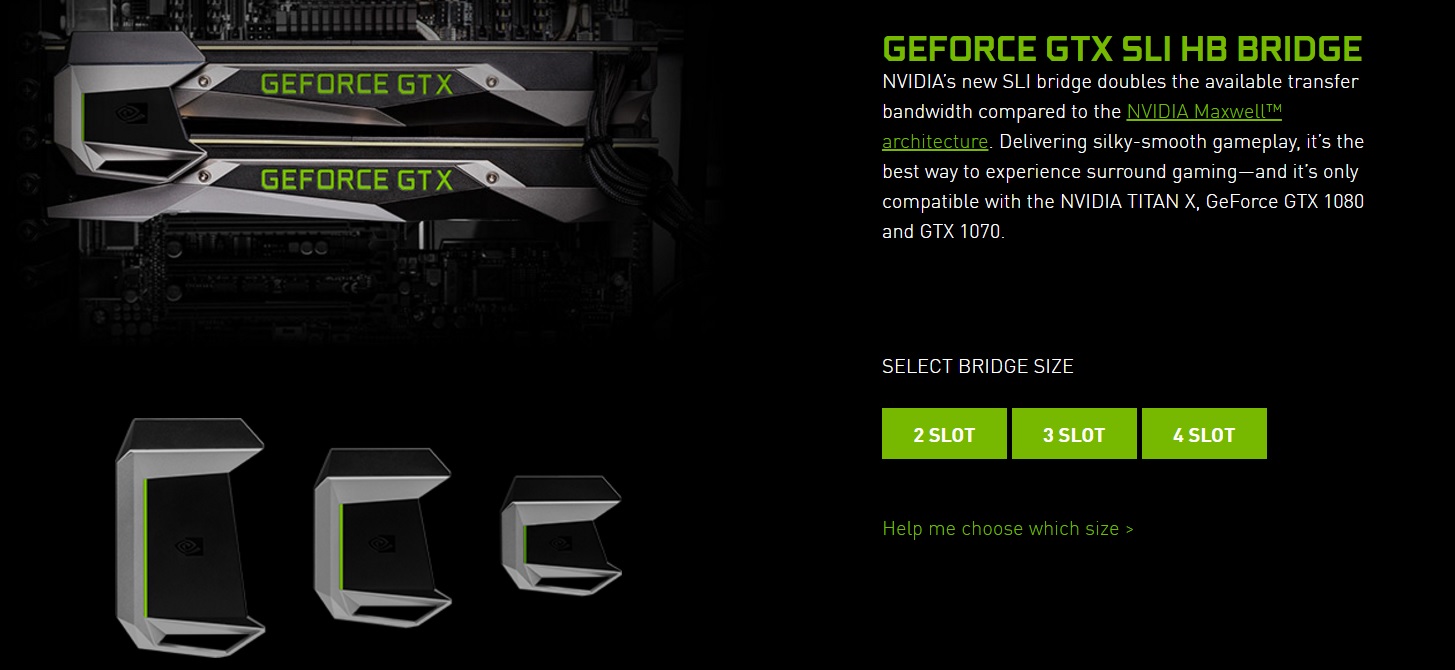

Updates to the SLI feature

A new generation of HB (High Bandwidth) SLI bridges doubles the available transfer bandwidth compared to the previous Maxwell architecture.

And here are the specs:

The rectangular GP104 die, made by the Taiwan Semiconductor Manufacturing Company (TSMC), measures 314 mm² and sits in a BGA package. It houses a transistor count of well over 7 billion, up from 3.5 billion on GK104 and 5.2 billion on GM204.

One of the main stars here is the 180 W TDP, which indicates a highly efficient design, hence the presence of only one 8-pin PCI-E power connector.

Then there is the 8 GB of VRAM, an ample amount for even the most demanding games the future will bring, regardless of resolution. As stated before, the new GDDR5X is another new technology that promises, besides the higher data rate and bandwidth, even more overclocking headroom.
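The single power connector makes sense once you add up the power budget. The sketch below assumes the commonly quoted limits of 75 W from the PCI Express x16 slot and 150 W from one 8-pin connector; those two figures are not stated in this review, and the helper names are ours.

```python
PCIE_SLOT_W = 75    # assumption: power available from the x16 slot
EIGHT_PIN_W = 150   # assumption: power available from one 8-pin PCI-E connector


def board_power_budget_w(eight_pin_connectors: int = 1) -> int:
    """Total power the card may draw from the slot plus its connectors."""
    return PCIE_SLOT_W + EIGHT_PIN_W * eight_pin_connectors


def headroom_w(tdp_w: float, power_limit_percent: int = 100) -> float:
    """Budget left over after the TDP scaled by the power-limit slider."""
    return board_power_budget_w() - tdp_w * power_limit_percent / 100


print(f"Budget with one 8-pin: {board_power_budget_w()} W")
print(f"Headroom at stock 180 W TDP: {headroom_w(180):.0f} W")
print(f"Headroom at 120 % power limit: {headroom_w(180, 120):.0f} W")
```

Even with the power limit raised to 120 %, as in the overclocking section of this review, the 216 W target still sits under the assumed 225 W budget.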

Packaging & Visual inspection

The GTX 1080 Founders Edition is somewhat of a departure from the norm for NVIDIA. Its actual construction isn’t too significantly changed, but NVIDIA has changed how their reference cards are positioned.

In previous generations the reference cards were the baseline and board partners could either build higher end cards to sell at higher prices, or build cheaper cards and sell them near the MSRP.

However, with the 10 series, the reference cards have become a higher-end option, selling for anywhere between $50 and $100 more than NVIDIA’s baseline MSRP.

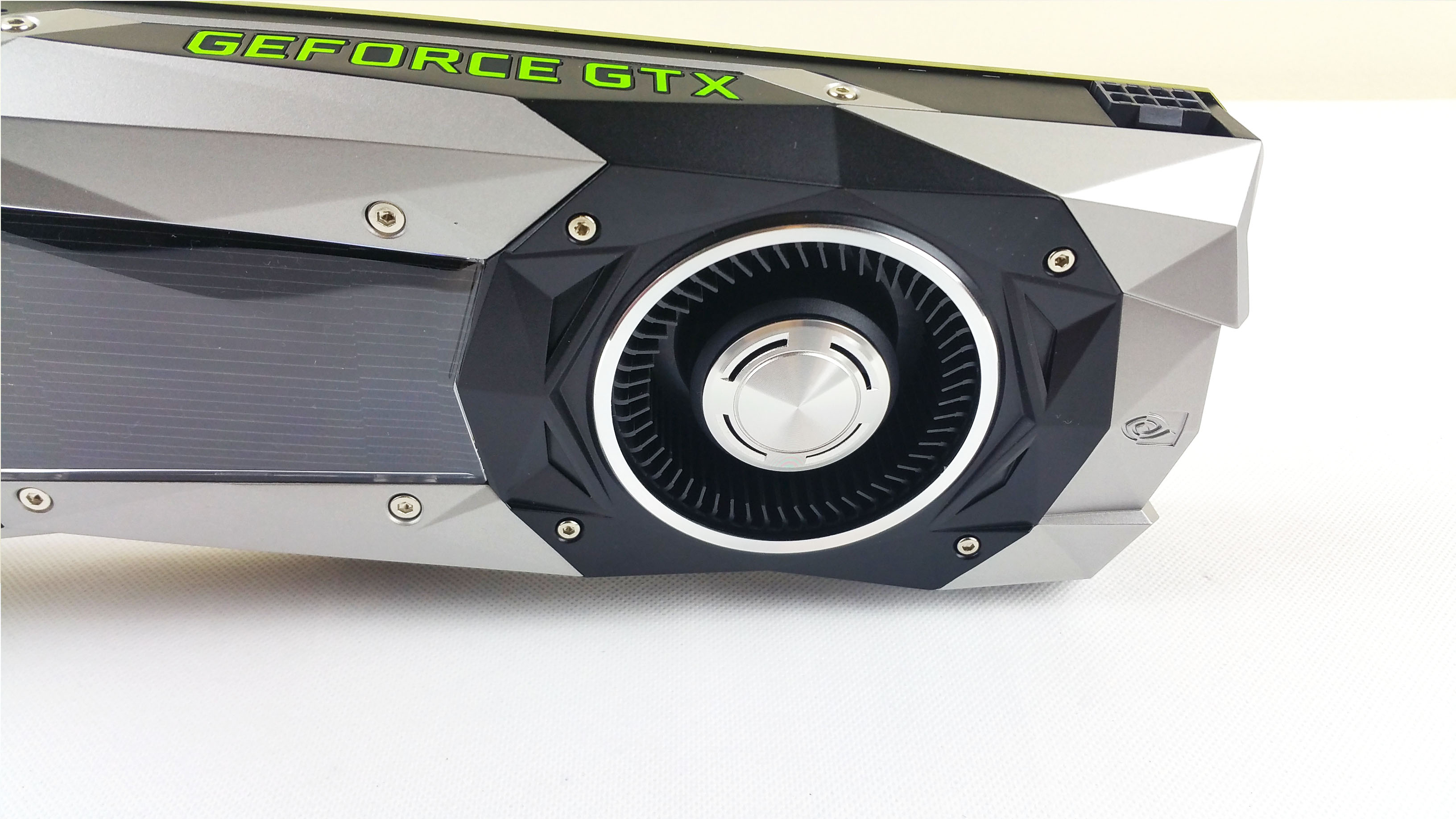

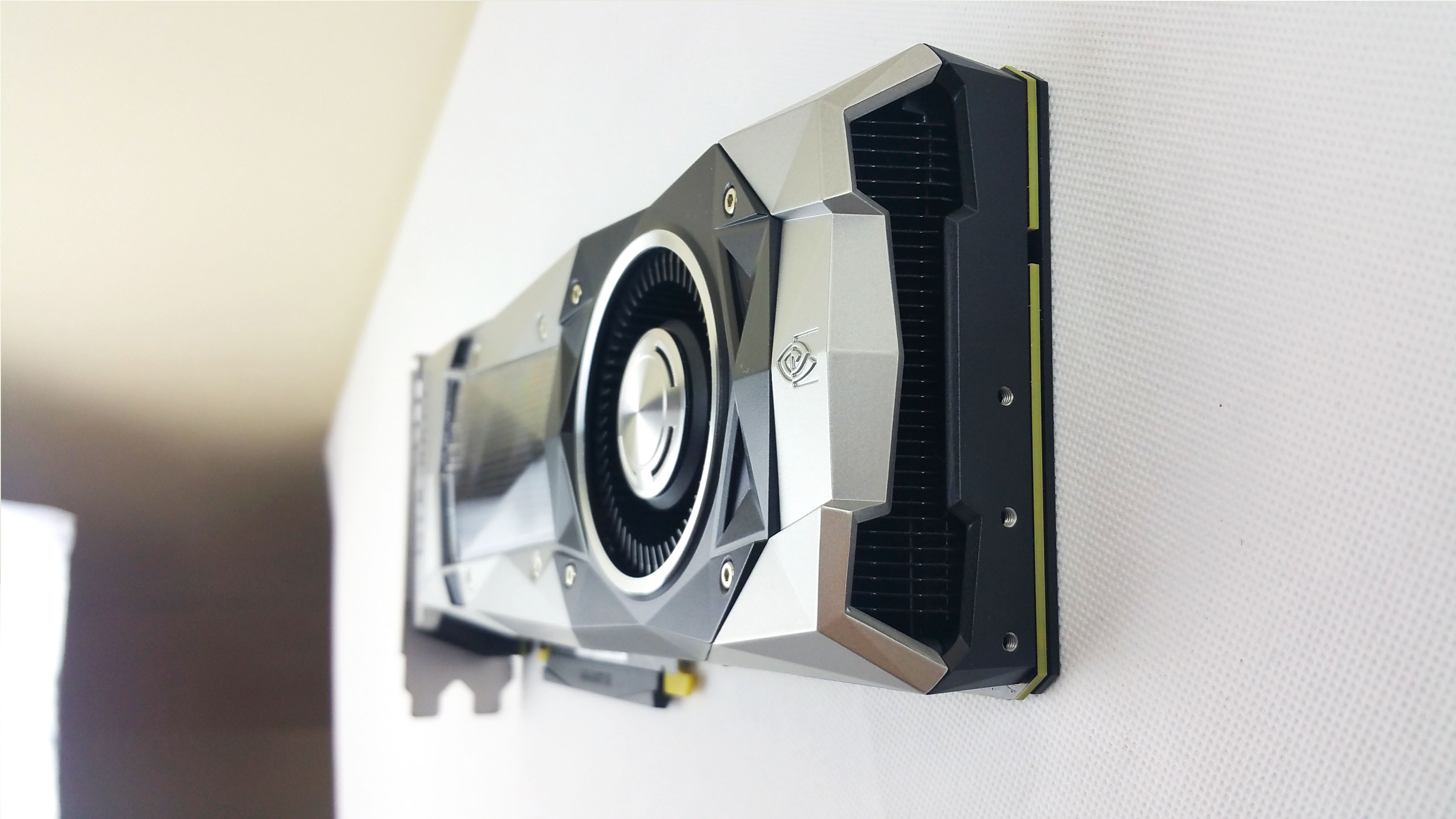

The card also has a redesigned outer shell, with more angular and sharp edges reminiscent of futuristic fighter jets.

Still, the overall size of the card is unchanged from past reference designs, and at 10.5” / 266.7 mm long, these cards will fit in the same amount of space occupied by past NVIDIA high-end reference cards. In NVIDIA’s view, the blower design provides a nice balance between cooling and acoustics, and it’s compatible with virtually every case out there, as there’s no need to rely on chassis cooling to handle the heat from the card.

So, here we have a board partner, Gigabyte, who gives us their new Illuminati-cyborg-eye design on the front of the box. It is a very cool and aggressive look.

On the back we have a quick mention of some of the main technologies, like Virtual Reality and G-Sync.

The top and bottom sides have the brand name and model.

The other lateral sides carry the classic NVIDIA green stickers and other codes, like the serial number.

Inside we have the standard packaging from Gigabyte, an all-black box. Protection comes from foam and an anti-static bag. The accessories are the boring stuff: a CD with drivers and the user’s manual.

And here she is.

This is the new cooler design, as previously stated. As with the Titan X, it looks really good.

Finally, a backplate is present, not for thermal reasons but for extra PCB protection.

Here is a size reference compared to an MSI GTX 770 4G.

On the top side, besides the SLI pins and the illuminated green GeForce GTX logo, we also notice the single 8-pin PCI-E power connector.

Let’s continue with the display connectivity. It has:

– 3x DisplayPort (DP 1.2 certified, DP 1.3/1.4 ready)

– 1x HDMI 2.0b port

– 1x Dual Link DVI port

And get this: it supports a maximum resolution of up to 7680 x 4320 @ 60 Hz! (Note that for this to work it needs dual DisplayPort connectors or one DisplayPort 1.3 connector.)

The GTX 1080 supports up to four displays simultaneously. On the technical side, the display pipeline supports HDR gaming, as well as video encoding and decoding. New to Pascal are HDR Video (4K@60 10/12b HEVC Decode), HDR Record/Stream (4K@60 10b HEVC Encode), and HDR Interface Support (DP 1.4).

The fan blower is accompanied by a copper heatsink on the inside.

The air is sucked in from the front and from the blower’s side and exhausted through the back.

This is a standard cooling principle, but NVIDIA uses it for maximum case and air-cooling compatibility, even if no extra case fans are present.

That being said and witnessed, let’s install it and have some fun with it.

Testing methodology

All games and synthetic benchmarks are first run at 1920 x 1080 with maximum settings applied and V-Sync off; any changes will be pointed out. They are then run at 2K (2560 x 1440) and 4K with no anti-aliasing and no V-Sync. Margin of error is within 2-4 %.

Sound Meter: Pyle PSPL01 – positioned 1 meter from the case (we allow a +/- 2-3 % margin of error), with an ambient noise level of 25-30 dBA

Power consumption: measured via our wall monitor adapter: Prodigit 2000MU

Hardware used:

Processor: Intel i7 6600k @ 4.5 GHz – 1.280 V

CPU Cooler: Noctua NH-L9i

Case: NCase M1 V5.0 mITX

SSD: Samsung 850 Evo 250 GB

HDD: WD Scorpio Blue 500 GB

Motherboard: ASUS ROG Maximus Impact VIII Z170 mITX

RAM: 16 GB (2×8) Corsair Vengeance LPX DDR4 2400 MHz C14

PSU: Corsair SF600W SFX

Cable Extensions: Corsair Premium Extension Kit – Black

Displays:

–Samsung 32″ FHD TV LED 100 Hz – UE32F5000

–LG 55″ Ultra HD 2K/4K TV – 55UC970V

Other video cards – all at factory/stock settings:

– NVIDIA GTX TITAN X Pascal 12G

– MSI GTX 980 Ti TF V 6G

– Gigabyte GTX 970 G1 4GB

The card installed:

And as is customary for NVIDIA, the logo lights up as well – in green – what else?

Software

– Windows 10 Pro x64 Build 1607

– NVIDIA GeForce WHQL 375.63

– GPU-Z v1.9.0

– CPU-Z v1.72.1

– MSI Afterburner v4.11 – To OC and to record the FPS and load/temperatures (a room temperature of 20 degrees C)

– Valley Benchmark v1.0

Results

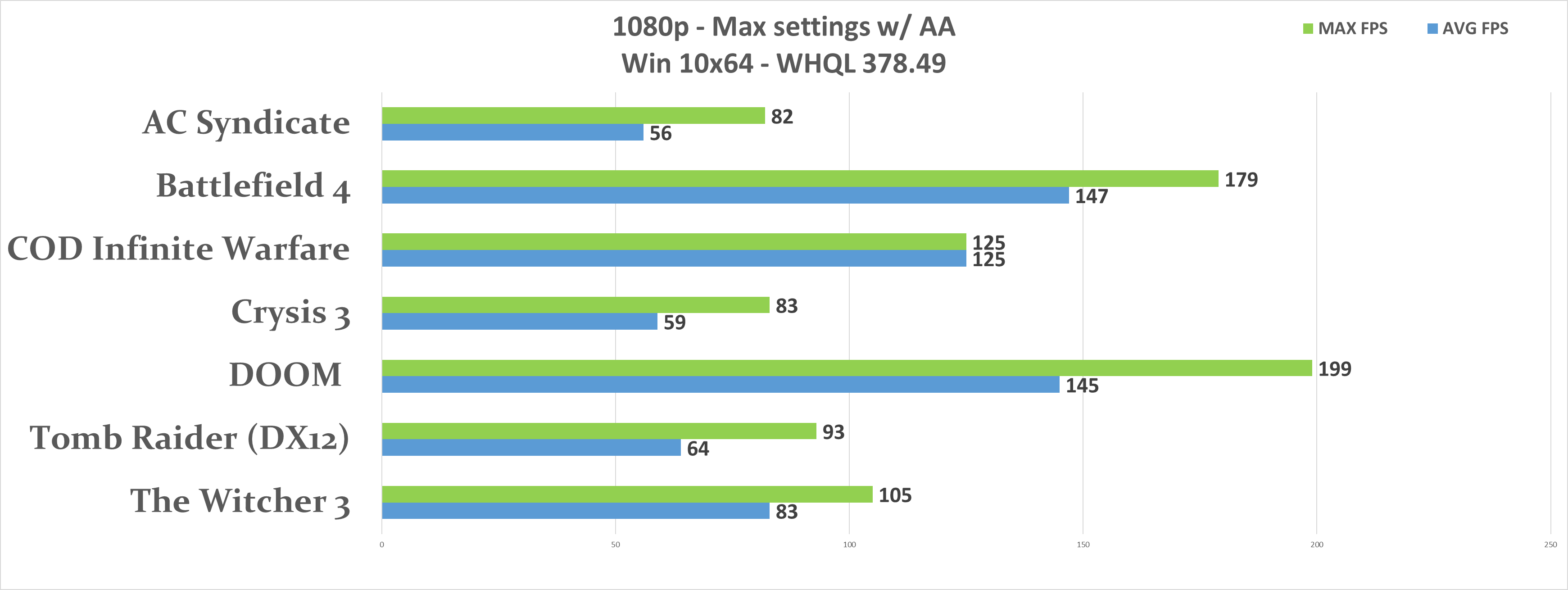

Here are the quick numbers for the card by itself.

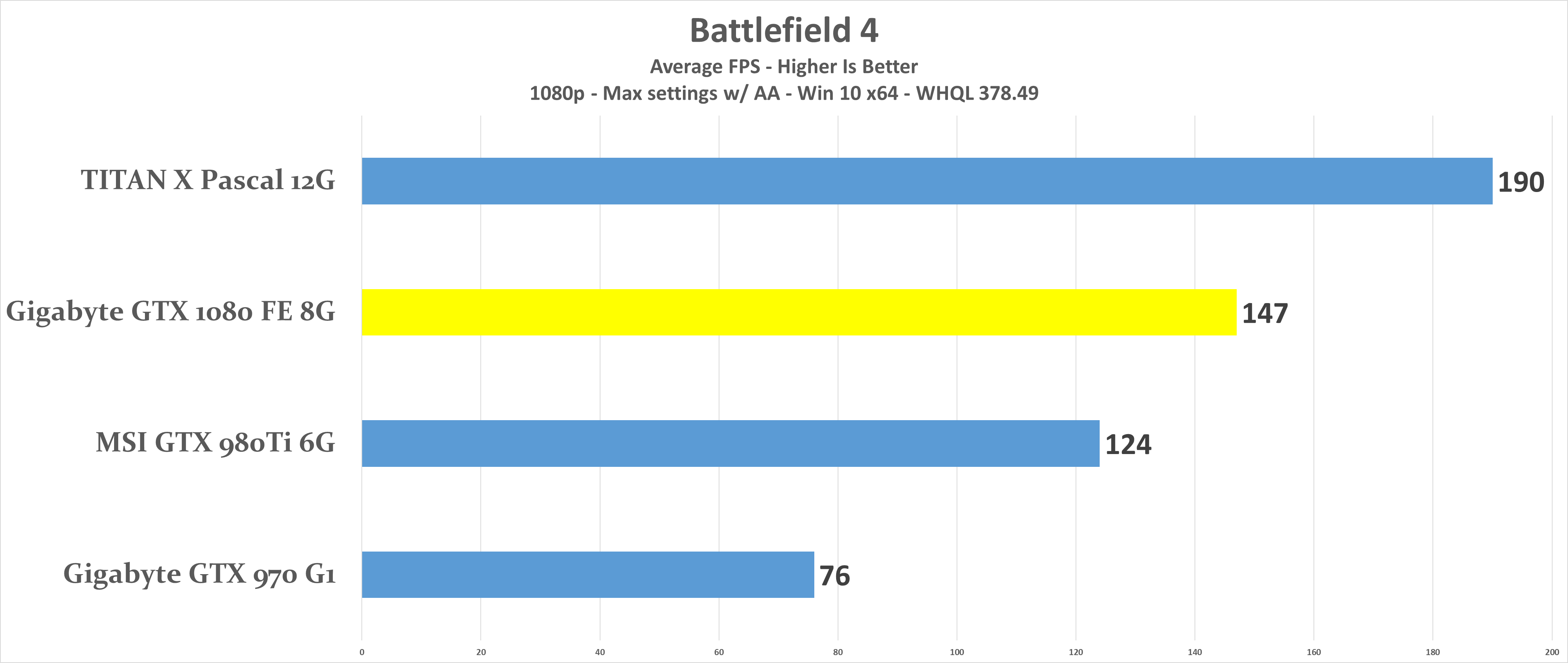

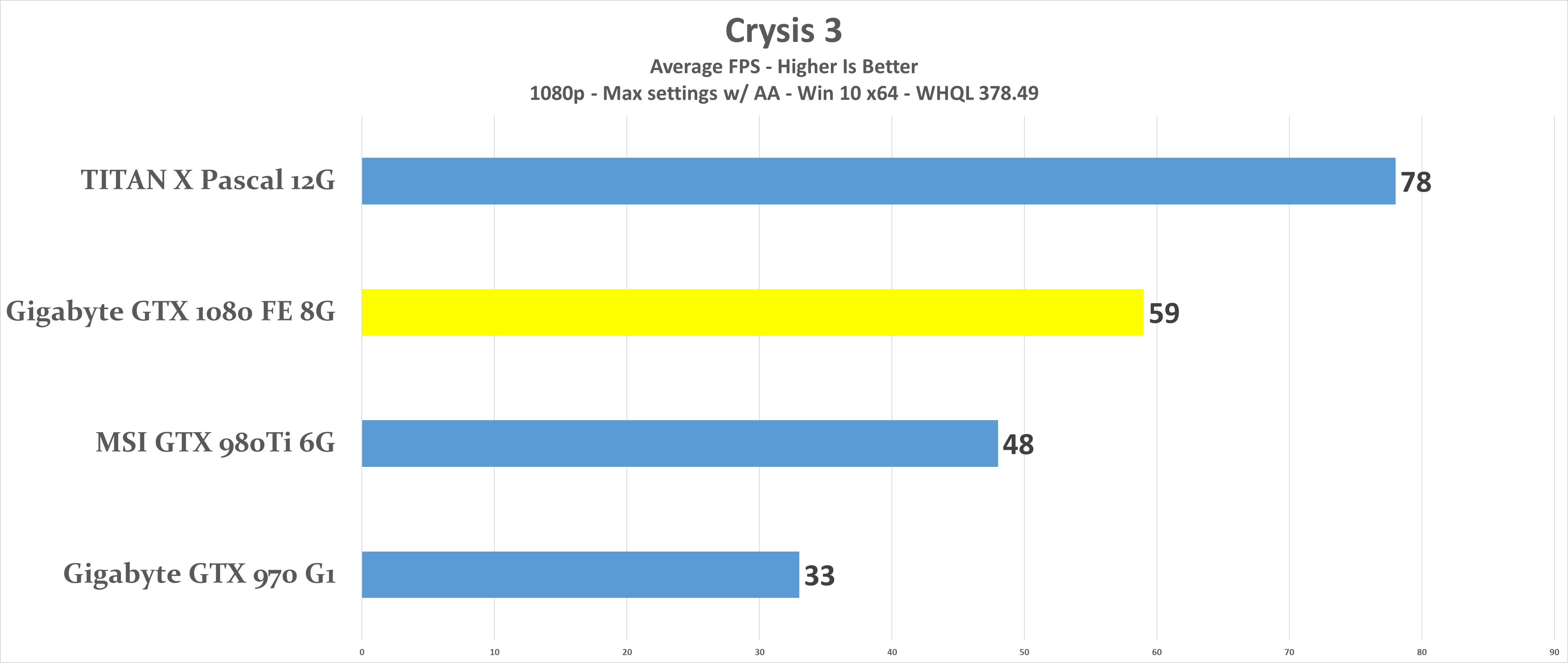

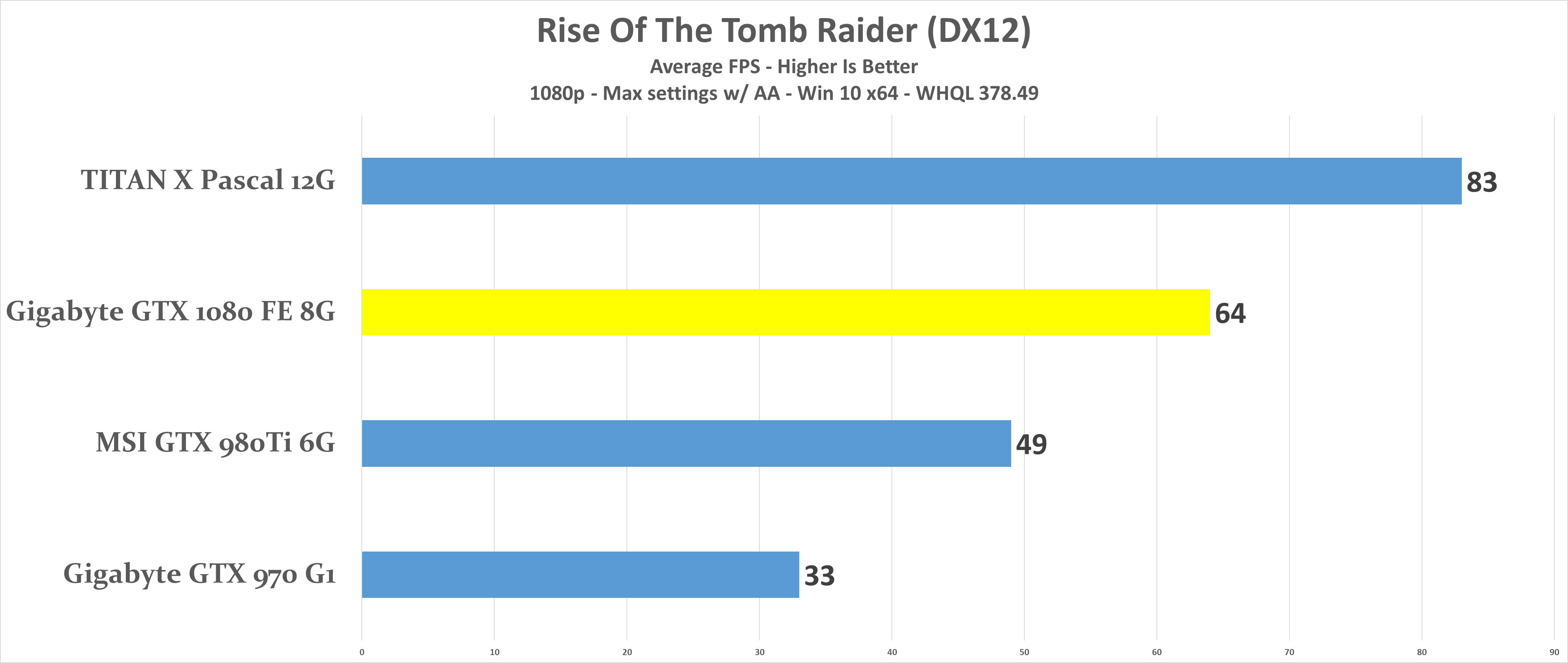

FHD 1080p

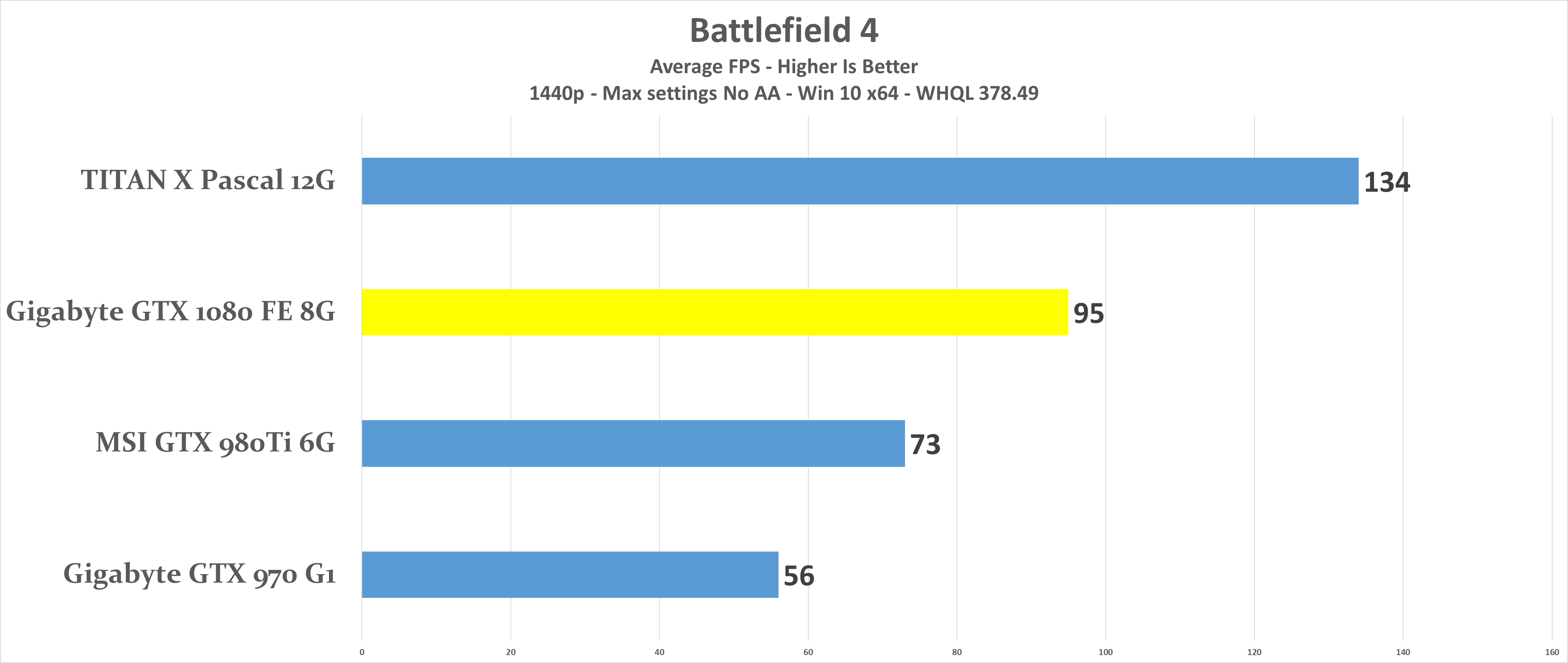

2K/1440p

ULTRA HD 4K

And now compared to the other video cards:

In 1920×1080 we have:

At 2560×1440 we get:

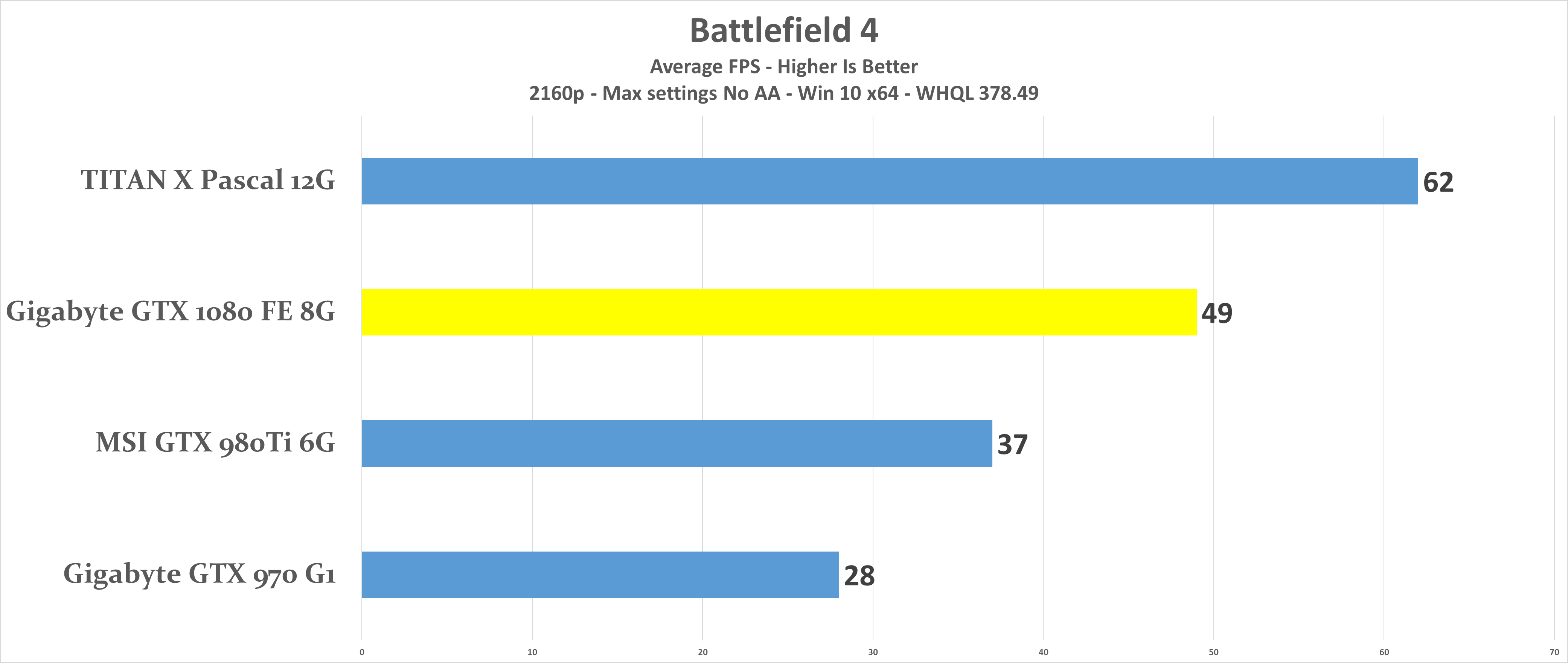

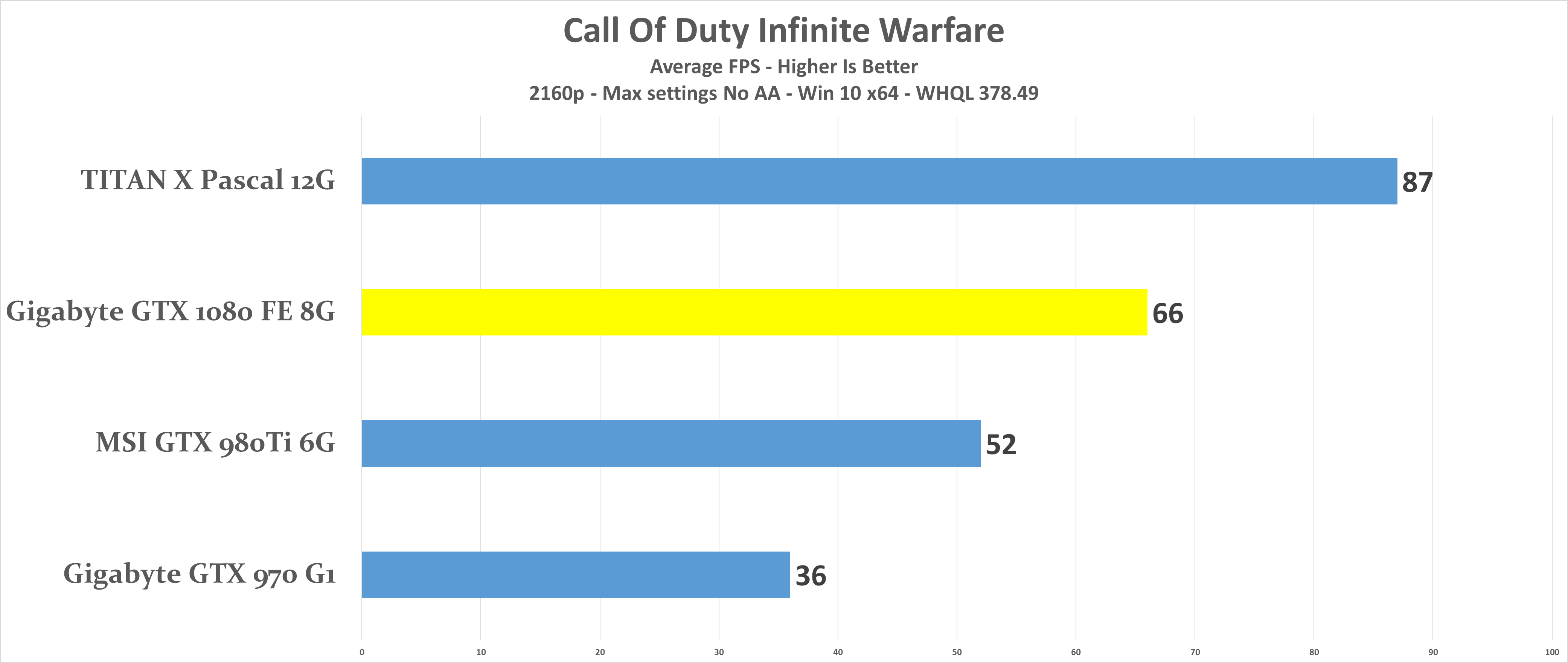

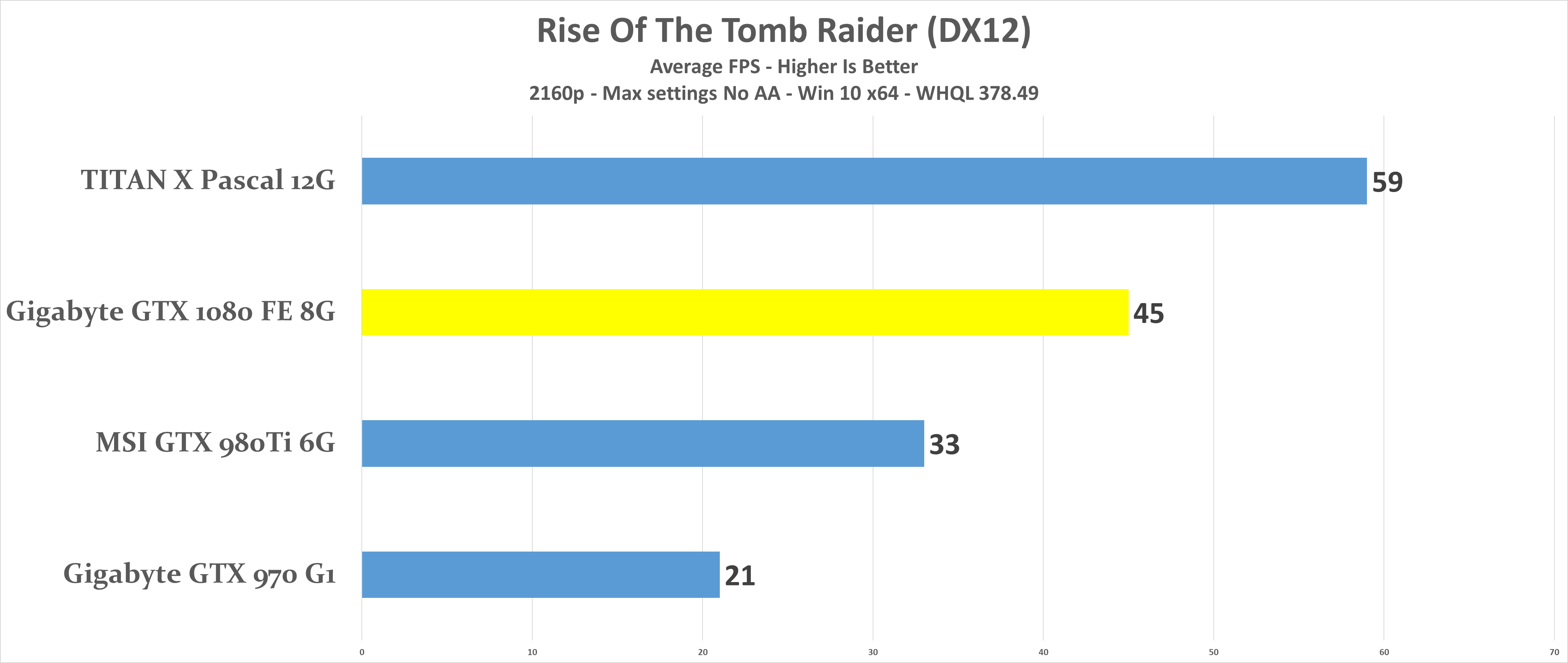

And in 3840×2160 we see:

Temperatures

Noise output

Power consumption

* Idle = under 10 W

* One run of Valley Benchmark @ 1080p 8xAA Extreme HD DX11 = 179 W

Overclocking

* We recommend starting in increments of 50 MHz on the core, running a benchmark to test stability, and then increasing or decreasing by 25 MHz until the maximum stable value is found.

* Here are our best settings so far. Given the limitations of the stock air cooler, we did not tinker with the voltage:

– Power limit: 120 %

– Temp limit: 92°C

– Core clock: +200 MHz

– Memory clock: +500 MHz

– Fan speed: 60 %
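The 50 MHz / 25 MHz procedure described above boils down to a coarse climb followed by one fine adjustment. Here is a minimal sketch of that search; `is_stable` is a hypothetical callback standing in for a manual benchmark run, the 400 MHz ceiling is an arbitrary safety cap of ours, and the model assumes that if an offset is unstable, every higher offset is unstable too.

```python
from typing import Callable


def find_stable_offset(is_stable: Callable[[int], bool],
                       coarse: int = 50, fine: int = 25,
                       ceiling: int = 400) -> int:
    """Return the highest core offset (MHz) that passed the stability check."""
    offset = 0
    # Coarse pass: climb in 50 MHz steps while the benchmark still passes.
    while offset + coarse <= ceiling and is_stable(offset + coarse):
        offset += coarse
    # Fine pass: try one extra 25 MHz step on top of the last good value.
    if offset + fine <= ceiling and is_stable(offset + fine):
        offset += fine
    return offset


# Hypothetical cards that become unstable above a given offset.
print(find_stable_offset(lambda mhz: mhz <= 200))  # 200
print(find_stable_offset(lambda mhz: mhz <= 230))  # 225
```

In practice each `is_stable` call is a full benchmark run, so the coarse pass keeps the number of runs low before the final 25 MHz refinement.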

Let’s centralize our findings:

– The boost went from 1733 MHz to 1933 MHz (+ 11.54 % increase)

– The memory bandwidth reached 352.3 GB/s, up from 320.3 GB/s (+ 9.99 % increase)

– In 4K gaming we saw the AVG FPS reaching an overall increase of + 9-10 %.

– In all of our games the card always took 2nd position, confirming the 10 series hierarchy: the GTX 980 Ti is basically a little faster than a Titan X (Maxwell), which in turn equals the GTX 1070, give or take 5 %.
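For anyone who wants to recheck the overclocking gains, the percentages follow directly from the before and after figures listed above. A small sketch (the helper name is ours):

```python
def percent_increase(before: float, after: float) -> float:
    """Relative gain of `after` over `before`, in percent."""
    return (after / before - 1) * 100


boost_gain = percent_increase(1733, 1933)        # boost clock, MHz
bandwidth_gain = percent_increase(320.3, 352.3)  # memory bandwidth, GB/s

print(f"Boost clock gain:      {boost_gain:.2f} %")
print(f"Memory bandwidth gain: {bandwidth_gain:.2f} %")
```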

Conclusion

The new 16 nm FinFET node proves its worth with high efficiency and strong overclocking capability. The price increase and mediocre cooling aside, there is no denying the great performance, which makes this one hell of a card.

The good:

+ The 2nd most powerful GPU (until the 1080 Ti appears)

+ 8 GB VRAM of GDDR5X

+ New cooling with a futuristic/stealth design

+ Highly efficient

+ New NVIDIA technologies like Ansel, FastSync, HEVC Video, and VR

+ TSMC’s new 16 nm FinFET fabrication node

+ Really easy to overclock, and 2.xx GHz+ on the boost is possible as long as cooling is sufficient

The bad:

– The fan doesn’t turn off at idle

– Not worth the price premium NVIDIA charges

– Mediocre cooling on the automatic fan curve, and it gets noisy above 60 % fan speed

– Needs really good cooling to keep the performance constant