We want to introduce a new type of review, more of a retrospective, to see how far we have come (or not) and to revisit some of the most popular, eccentric and memorable products (for better or worse) from the IT world.

So, with a hint of melancholy, today we would like to talk about arguably one of the best and most important graphics cards ever released, the mighty 8800 GTX from NVIDIA.

*27.04.2017 update:

Here is the full video review:

And here are the updated tests in SLI!

It was released on the 8th of November, 2006.

That’s exactly 8 years ago, to the day!

That’s light years in the IT world.

At the time it was obviously the most expensive graphics solution ($599) and the most powerful, with the G80 being the largest commercial GPU ever constructed at that point.

It consists of 681 million transistors covering a behemoth 480 mm² die surface area built on a 90 nm process.

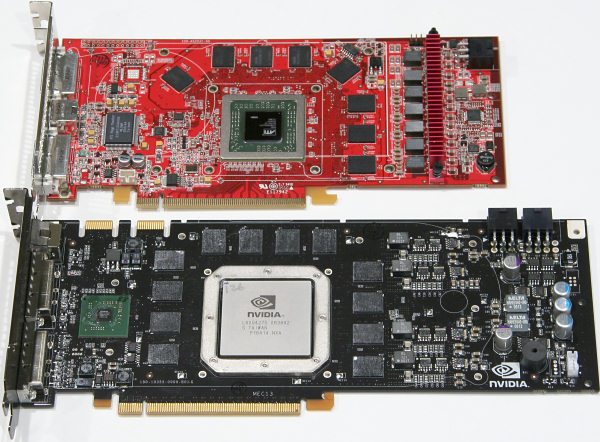

Just look at the size of that thing!

Here it is next to an ATI X1900.

But contrary to what usually happens with high-end/enthusiast products, who would have guessed that this one would also endure the test of time?

Those who paid the price for it brand new and kept it have gotten fantastic value for money out of their investment.

It lasted for many years and handled several generations of games.

Another milestone achieved – it was the first card with native support for DX10. It was based on the Tesla microarchitecture and featured the company’s first unified shader architecture (basically, it uses a consistent instruction set across all shader types).

With DX10’s arrival, vertex and pixel shaders shared a large amount of common functionality, so moving to a unified shader architecture eliminated a lot of unnecessary duplication of processing blocks.

In addition, the new API was meant to remove a lot of the CPU limitations of DirectX 9 titles by moving more of the graphics processing onto the GPU.

And if this wasn’t enough, it was the first to offer the 3-way NVIDIA SLI option.

NVIDIA introduced SLI (Scalable Link Interface) in 2004.

SLI is a technology that combines multiple graphics cards into one functional output. It allows two, three or four graphics cards installed in a single motherboard to share the rendering workload.

SLI was first introduced by 3dfx in 1998 as Scan-Line Interleave (SLI), to be used with dual Voodoo2 cards.

As we know, NVIDIA bought 3dfx and re-introduced SLI as Scalable Link Interface.

NVIDIA’s SLI implementation was first re-introduced with their 6000-series graphics cards to enable dual-card operation; in 2007 they introduced their first 3-way SLI with the GeForce 8800 GTX/Ultra, and they went further when they eventually released 4-way SLI.

These combined were the revolution that the G80 brought.

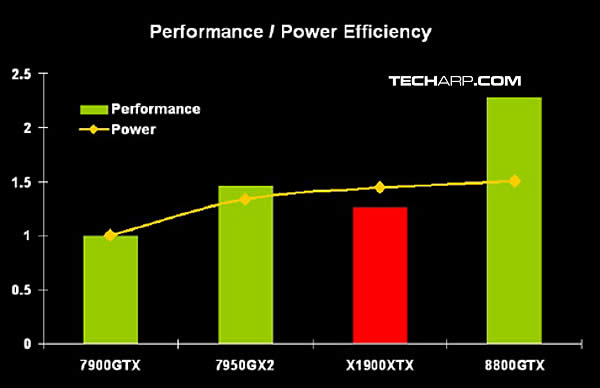

Performance was 60-70 % higher than the previous 7xxx series at the very least, and in some cases double. It scored higher than anything the competition had, even in CrossFire: the card was faster than a single Radeon HD 2900 XT, and faster than two Radeon X1950 XTXs in CrossFire or two GeForce 7900 GTXs in SLI.

In fact, it was so powerful that it took some catching up before there was a processor on the market that could let it unleash all of its power.

Hard to imagine we will ever see such leaps in today’s market.

It took four years of development and $475 million to produce the GTX, which had 128 stream processors clocked at 1.35 GHz, a core clock of 575 MHz, and 768 MB of 384-bit GDDR3 memory at 1.8 GHz, giving it a memory bandwidth of 86.4 GB/s.
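For those wondering where the bandwidth figure comes from, here is a minimal Python sketch of the usual peak-bandwidth arithmetic. It assumes the quoted 1.8 GHz is the effective (double data rate) memory speed; the function name is ours and purely illustrative.

```python
def memory_bandwidth_gbs(bus_width_bits: int, effective_rate_ghz: float) -> float:
    """Peak theoretical bandwidth in GB/s.

    bus_width_bits / 8 is the number of bytes moved per transfer,
    effective_rate_ghz is the number of transfers per nanosecond
    (assumed to be the effective, double data rate figure).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_ghz


# 8800 GTX figures quoted in the text: 384-bit bus, 1.8 GHz memory.
gtx_bandwidth = memory_bandwidth_gbs(384, 1.8)
print(f"8800 GTX: {gtx_bandwidth:.1f} GB/s")
```

The same function can be fed the bus width and memory speed of any other card to check its quoted bandwidth.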

It also came with the new 3-way SLI support.

Of course, it needed a lot of power for that time, demanding up to 185 watts and requiring two 6-pin PCI-E power connectors to operate. It made everybody upgrade their power supplies.

The Descendants

Building on its success, NVIDIA released the G92-based 8800 GT the following year, on 29 October; it was the first to move to the 65 nm process.

Again, it was another successful product, because it offered an excellent price/performance ratio in the $200-250 mainstream range, delivering almost 80 % of the performance of the king, the 8800 GTX.

It had 112 stream processors clocked at 1.5 GHz, a core clock of 600 MHz, and 512 MB of 256-bit GDDR3 memory at 1.8 GHz, giving it a memory bandwidth of 57.6 GB/s.

It had less hardware, yes, but it is made up of more transistors (754M vs. 681M). This is partly because G92 integrates the updated video processing engine (VP2) and the display engine that previously resided off chip. Now all the display logic, including the TMDS hardware, is integrated into the GPU itself.

Then NVIDIA did another refresh with the 9xxx series, which was considered a period of stagnation, and some of the 2xx series were still based on the 8800 GT, like the GTS 250.

Even the Frankenstein experiment, the 9800 GX2, was made up of 2x 8800 GTSs (it was the last dual card built as two boards sandwiched together; everything that followed put both GPUs on a single board).

The last descendant of the 8800 GTX was the first generation of the 9800 GTX.

So the 8800 GTX was the grandfather to them all.

Also in 2007 they made a special version of the 8800 GTX, the 8800 Ultra.

It was basically the same card, but with higher clocked shaders, core and memory.

NVIDIA later detailed that it was a new stepping that created less heat, which allowed the higher clocks.

It had the same 128 stream processors, clocked at 1.5 GHz, a core clock of 612 MHz, and 768 MB of 384-bit GDDR3 memory at 2.16 GHz, giving it a memory bandwidth of 103.7 GB/s.

It also featured a new cooler design.

It looked great on paper but didn’t deliver much of an improvement over the original GTX, barely ~10 %, yet it retailed for an obscene amount at that time: $800-1000.

So, it offered very poor value.
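To put a rough number on the value argument, here is a small Python sketch that compares performance per dollar using only the figures quoted in this article. Taking the midpoint of each price range, and treating "almost 80 %" and "~10 %" as exactly 0.80 and 1.10, are our simplifying assumptions.

```python
# Relative performance (8800 GTX = 1.00) and launch prices from the article.
# Midpoints of the quoted price ranges are an assumption for illustration.
cards = {
    "8800 GTX": {"rel_perf": 1.00, "price_usd": 599},
    "8800 GT": {"rel_perf": 0.80, "price_usd": (200 + 250) / 2},
    "8800 Ultra": {"rel_perf": 1.10, "price_usd": (800 + 1000) / 2},
}


def perf_per_100_usd(card: dict) -> float:
    """Relative performance bought by every 100 dollars spent."""
    return card["rel_perf"] / card["price_usd"] * 100


value = {name: perf_per_100_usd(card) for name, card in cards.items()}

# Print the cards from best to worst value.
for name, score in sorted(value.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<11} {score:.3f} GTX-equivalents per $100")
```

Under these assumptions the 8800 GT comes out well ahead, the GTX sits in the middle and the Ultra is last.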

And to put everything side by side.

After this recap, let’s see how it still performs in 2014.

So we got two of them, still kicking and in very good condition, both NVIDIA reference cards, and we will see how they perform exactly 8 years after their release.

How we tested:

At HD resolution (1280 x 720), we started with 1x 8800 GTX and then moved to 2x 8800 GTX in SLI.

All the quality settings were set to HIGH, with some AA and v-sync off.

Our goal was to obtain an average of at least 25-30 fps in all scenarios in order to agree that the 8800 GTX is still viable for gaming.

We gathered as many demanding, modern video games as we could that still support DX10 (DX11 exclusives like Crysis 3, Hitman Absolution, Shadow of Mordor etc. will not work on the 8800 GTX):

– Assassin’s Creed 4 Black Flag

– Tomb Raider

– Battlefield 4

– Far Cry 3

– Sniper Elite 3

– Metro Last Light

– Dreamfall Chapters Book 1

Hardware:

CPU: Intel Q6600 Revision G0 @ 2.4 GHz

CPU Cooler: Prolimatech Megahalems Revision A.

Fan cooler: Noiseblocker 120 MM Black Silent Pro PL-PS PWM 1600 rpm @ 59 CFM

Memory Modules: 2x 2 GB OXCCO DDR2 667 MHz

Motherboard: XFX 680i SLI

Video cards: 2x NVIDIA Reference 8800 GTX

Case: SilverStone PS-10B

120 mm Case Fans: 1x SilverStone; 2x Bitfenix Spectre

80 mm Case Fan: 1x Noiseblocker P-P PWM 2000 Rpm @ 32 CFM

Power Supply: Corsair V2 CX600W 40A

Monitor: LCD 19″ Samsung 1280×720

Wall power meter: Prodigit 2000MU

Sound meter: Pyle PSPL01 (positioned 1 meter away from the system)

Software:

Windows 7 Ultimate x64 SP1

GPU-Z v0.7.7

MSI Afterburner v3.00 – Our main input for frame rates and temperature monitoring

NVIDIA 340.52 Drivers – The last official drivers for the 8800 GTX

The Results

After enabling SLI in the NVIDIA drivers, this is what we came up with:

So, first scenario with only 1x 8800 GTX @ 720p – Everything on HIGH.

Then, SLI with 2x 8800 GTX @ same as above.

Very, very interesting!

At 720p, with a decent old quad core that wasn’t even overclocked, we managed to tame AAA games like Battlefield 4 (AVG 23 FPS), Tomb Raider (AVG 42 FPS) and Metro LL (AVG 35 FPS) with just one 8800 GTX!

Dreamfall, with 40 FPS AVG, was yet another success.

Again, these were all on HIGH settings.

In SLI things got even better: we put Assassin’s Creed under our belt (AVG 38 FPS, up from 17), then Metro LL (AVG 49 FPS), and Tomb Raider was just ridiculous (60 FPS AVG).

The same goes for Sniper Elite (AVG 30 FPS, up from 20).

But with SLI, Battlefield 4 didn’t even start. We understand this is a well-known problem with a workaround; once we have tried it, we will update the results.

Ethan Carter didn’t show any improvement in SLI, which points out that there are a lot of variables and risks in using an SLI configuration.

And Far Cry 3 froze every time we entered the game, so we couldn’t continue.
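For a quick look at how well SLI scaled, here is a short Python sketch that turns the 720p averages quoted above into percentage gains. Only the games where we quoted both a single-card and an SLI average are included; the helper name is ours.

```python
# Average FPS quoted above at 720p: (single 8800 GTX, 2x 8800 GTX in SLI).
results = {
    "Assassin's Creed 4": (17, 38),
    "Tomb Raider": (42, 60),
    "Metro Last Light": (35, 49),
    "Sniper Elite 3": (20, 30),
}


def sli_gain_percent(single_fps: float, sli_fps: float) -> float:
    """Percentage increase in average FPS when going from one card to two."""
    return (sli_fps / single_fps - 1) * 100


gains = {game: sli_gain_percent(*fps) for game, fps in results.items()}

for game, gain in gains.items():
    print(f"{game:<20} +{gain:.0f} %")
```

Most titles land around +40 to +50 %. The Assassin’s Creed gain comes out above 100 %, which is more than ideal two-card scaling, so that figure probably also reflects differences between runs rather than pure SLI scaling.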

All in all, we are pretty impressed by these results in triple-A games from 2013 and 2014 on a video card from 2006.

* 06.01.2017 Update

As promised, let’s try this ancient power at full HD resolution. Everything is set to the maximum quality settings again.

– Assassin’s Creed 4 Black Flag

– Battlefield 4

– Crysis 2

– Metro Last Light

– Sniper Elite 3

– Spec Ops: The Line

– Tomb Raider

A single 8800 GTX at max settings in 1080p is just a parody in some newer titles. The games are simply too new and the video card too old/underpowered to handle them.

But in some better optimized games, like Tomb Raider and Spec Ops, the results are excellent.

Moving to 8800 GTX SLI at max settings in 1080p paints another picture. Some of the games will not even start, as there is no more driver optimization or support. And when they did work, in AC4BF for example, SLI made no difference.

But in Metro LL, Spec Ops and Tomb Raider especially, the results are perfect! Another pleasant surprise.

All in all these updated results will not affect our conclusion.

Temperature

Recorded room temperature: 22° C

GPUs in idle: 50° C

GPUs in load: 60-65° C with a 2° C difference between the two cards.

GPUs in load, max recorded temperature: 68° C, with 70° C on the lower card.

For a card that was notorious for high temperatures, we have to disagree here, even with our SLI setup.

And we barely have any airflow in the case for the video cards; all the fans help the CPU.

Not to mention, these cards are not in their first youth.

So again, excellent results.

Noise

Again, the card was also notorious for being loud. Here we will agree slightly, because with 2 cards the noise adds up: our meter was showing 38-40 dB(A) during our gaming sessions.

Not intrusive, but it was there.

At idle they were pretty much silent.
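As a side note on how noise "adds up", decibel levels are logarithmic, so two equal sources do not double the reading. Here is a minimal Python sketch of the standard formula for summing incoherent sources. The 37 dB single-card level is a hypothetical input and not something we measured.

```python
import math


def combined_level_db(levels_db: list[float]) -> float:
    """Sum incoherent sound sources given as decibel levels.

    Each level is converted to relative power, the powers are added,
    and the total is converted back to decibels.
    """
    total_power = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_power)


one_card = 37.0  # hypothetical single-card level, not a measured value
two_cards = combined_level_db([one_card, one_card])
print(f"Two cards: {two_cards:.1f} dB, {two_cards - one_card:.1f} dB above one card")
```

In other words, a second identical card adds roughly 3 dB on top of the first, which is in line with the noise being noticeable but not intrusive.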

Power Consumption

The wall meter showed 423 W at full load for total system consumption.

And at idle, ~227 W.

That is a lot by today’s standards, when an i7 with a GTX 980 will not even break 300 W under load, not to mention idle.

Conclusion

So as you can see, even today’s highly demanding games can still be played at respectable settings with very decent frames per second at 720p with only one 8800 GTX!

I think this says it all.

With SLI, things became even smoother.

This is amazing for an 8-year-old video card!

We are so enthusiastic about these results that we will do a follow-up where we test them at full HD resolution (1920 x 1080).

Maybe we will go crazy, get another one and do a ménage à trois!

Of course, the general multi-GPU negatives are present, like extra heat and power consumption and headaches with drivers, and something like a single GTX 260 will beat this setup.

But that’s not the point: something this old (and very cheap) can still throw a punch, with very honorable and impressive results.

If you are passionate and melancholy sometimes strikes you, like it did us, this is one of the best options.

We got them dirt cheap and really enjoyed testing them.

It’s safe to say this GTX revolutionized what people expected from a graphics card and made a great impact from its birth, with incredible performance over the generation that it replaced, up until so many years later.

We still hope for periods like this to happen again; sadly, we don’t think it will be anytime soon.

Thus, 8800 GTX, we salute you!